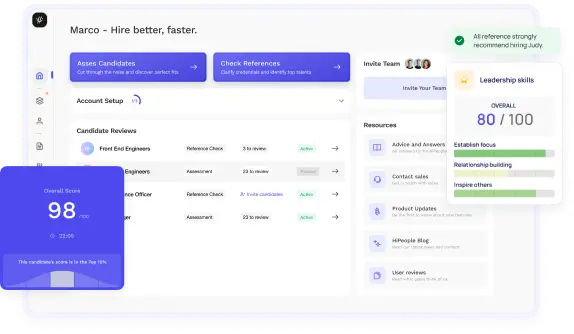

Streamline hiring with effortless screening tools

Optimise your hiring process with HiPeople's AI assessments and reference checks.

What does it take to excel in data science interviews? This guide covers the technical skills, problem-solving ability, and soft skills that employers look for in data science candidates. Whether you're a seasoned data scientist brushing up on your interview skills or a newcomer preparing for a first role, it is a resource for working through the data science interview questions you are likely to face.

Data science is an interdisciplinary field that combines domain knowledge, statistical analysis, machine learning, and programming to extract insights and knowledge from structured and unstructured data. Data scientists leverage data-driven approaches to solve complex problems, make informed decisions, and uncover hidden patterns and trends in large datasets. The field encompasses a wide range of techniques and methodologies, including data mining, predictive modeling, natural language processing, and deep learning, applied across industries and domains to drive innovation and business value.

Data science interview questions are designed to assess a candidate's technical skills, problem-solving abilities, domain knowledge, and soft skills relevant to the field of data science. These questions cover a broad range of topics, including data cleaning and preprocessing, exploratory data analysis, machine learning algorithms, big data technologies, and real-world data science scenarios. Data science interview questions may take the form of coding challenges, case studies, behavioral assessments, or technical discussions, depending on the organization and the specific role. Candidates are expected to demonstrate their proficiency in data manipulation, statistical analysis, predictive modeling, and communication, as well as their ability to apply technical knowledge to solve practical problems and drive business impact.

Data science interviews play a crucial role in the hiring process for data science roles, serving as a means for employers to evaluate candidates' suitability and competency for the position.

Data science interviews provide employers with valuable insights into candidates' skills, competencies, and potential for success in data science roles, helping them make informed hiring decisions and build high-performing data science teams.

Data science interviews come in various formats, each designed to assess different aspects of a candidate's skills and competencies. Before diving into preparation, it's essential to understand the landscape of data science interviews.

How to Answer: Explain the concept of regularization in machine learning, its purpose in mitigating overfitting, and the common types such as L1 (Lasso) and L2 (Ridge) regularization. Discuss how regularization adds a penalty term to the loss function to prevent model complexity.

Sample Answer: "Regularization in machine learning is a technique used to prevent overfitting by adding a penalty term to the model's loss function, discouraging overly complex models. It works by introducing a cost associated with large coefficients, thus encouraging the model to favor simpler solutions. L1 regularization (Lasso) adds the sum of the absolute values of the coefficients, which can drive some coefficients to exactly zero and so leads to sparse solutions. L2 regularization (Ridge) adds the sum of the squared coefficients, which shrinks them toward zero without eliminating them. Both techniques help in controlling the model's complexity and improving its generalization performance."

What to Look For: Look for candidates who demonstrate a clear understanding of regularization techniques, their purpose, and how they are applied in machine learning models. Strong candidates will be able to explain the differences between L1 and L2 regularization and articulate why regularization is crucial for model performance.
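To make the L1 versus L2 contrast concrete, here is a minimal NumPy sketch, not a production implementation. The synthetic dataset, the penalty strengths, and the helper names `fit_ols`, `fit_ridge`, and `fit_lasso` are all assumptions chosen for illustration. Ridge is solved in closed form and Lasso with a simple coordinate descent loop.

```python
import numpy as np

# Synthetic data (an assumption for illustration): only features 0 and 3 matter.
rng = np.random.default_rng(0)
n_samples, n_features = 200, 5
X = rng.normal(size=(n_samples, n_features))
true_w = np.array([3.0, 0.0, 0.0, -2.0, 0.0])
y = X @ true_w + 0.1 * rng.normal(size=n_samples)


def fit_ols(X, y):
    """Ordinary least squares: no penalty term."""
    return np.linalg.lstsq(X, y, rcond=None)[0]


def fit_ridge(X, y, lam):
    """Ridge (L2): minimise ||y - Xw||^2 + lam * ||w||_2^2 in closed form."""
    identity = np.eye(X.shape[1])
    return np.linalg.solve(X.T @ X + lam * identity, X.T @ y)


def fit_lasso(X, y, lam, n_iters=100):
    """Lasso (L1): minimise (1/2n)||y - Xw||^2 + lam * ||w||_1 by coordinate descent."""
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(n_iters):
        for j in range(d):
            # Residual with feature j's current contribution removed.
            partial_residual = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ partial_residual / n
            z = X[:, j] @ X[:, j] / n
            # Soft-thresholding sets small coefficients to exactly zero.
            w[j] = np.sign(rho) * max(abs(rho) - lam, 0.0) / z
    return w


w_ols = fit_ols(X, y)
w_ridge = fit_ridge(X, y, lam=50.0)
w_lasso = fit_lasso(X, y, lam=0.5)

print("OLS:  ", np.round(w_ols, 3))
print("Ridge:", np.round(w_ridge, 3))
print("Lasso:", np.round(w_lasso, 3))
```

In this setup the Ridge coefficients are all shrunk but stay nonzero, while the Lasso coefficients of the irrelevant features are set to exactly zero. That is the sparsity property the sample answer refers to.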

How to Answer: Define the bias-variance tradeoff and its significance in machine learning model performance. Describe how bias refers to the error introduced by approximating a real problem with a simplified model, while variance refers to the model's sensitivity to fluctuations in the training data. Discuss how finding the right balance between bias and variance is essential for optimal model performance.

Sample Answer: "The bias-variance tradeoff is a fundamental concept in machine learning that describes how two sources of prediction error change as model complexity changes. Bias is the error introduced by approximating a real problem with a simplified model; a high-bias model tends to underfit the data. Variance is the model's sensitivity to fluctuations in the training data; a high-variance model tends to overfit. Increasing model complexity typically lowers bias but raises variance, so finding the right balance between the two is crucial for achieving optimal model performance."

What to Look For: Seek candidates who can articulate a clear understanding of the bias-variance tradeoff and its implications for model performance. Look for examples or analogies that demonstrate their comprehension of how adjusting model complexity affects bias and variance.
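One way to illustrate the tradeoff is to fit polynomials of increasing degree to noisy samples of a known curve. The sketch below assumes a sine curve as the "real problem" and picks the noise level and degrees arbitrarily. It compares training error with error on held-out points.

```python
import numpy as np

rng = np.random.default_rng(0)


def true_function(x):
    # The underlying relationship (an assumption for this demo).
    return np.sin(2 * np.pi * x)


# Small noisy training set and a larger noisy test set from the same curve.
x_train = np.linspace(0.0, 1.0, 20)
y_train = true_function(x_train) + rng.normal(scale=0.2, size=x_train.size)
x_test = np.linspace(0.0, 1.0, 200)
y_test = true_function(x_test) + rng.normal(scale=0.2, size=x_test.size)


def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


train_mse, test_mse = {}, {}
for degree in (1, 3, 9):
    # Higher degree means a more flexible (more complex) model.
    coeffs = np.polyfit(x_train, y_train, deg=degree)
    train_mse[degree] = mse(y_train, np.polyval(coeffs, x_train))
    test_mse[degree] = mse(y_test, np.polyval(coeffs, x_test))
    print(f"degree={degree}: train MSE={train_mse[degree]:.4f}, "
          f"test MSE={test_mse[degree]:.4f}")
```

The straight line (degree 1) is too simple for a sine curve, so it has high error on both sets, which is the high-bias case. Training error keeps falling as the degree grows, but test error usually stops improving and can rise again once the model starts fitting noise, which is the high-variance case.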

How to Answer: Define supervised and unsupervised learning and explain the key differences between them. Discuss how supervised learning involves training a model on labeled data with input-output pairs, while unsupervised learning deals with unlabeled data and seeks to uncover hidden patterns or structures.

Sample Answer: "Supervised learning involves training a model on labeled data, where the algorithm learns from input-output pairs to make predictions or classify new data points. In contrast, unsupervised learning deals with unlabeled data, where the algorithm seeks to find hidden patterns or structures without explicit guidance. Supervised learning tasks include regression and classification, while unsupervised learning tasks include clustering and dimensionality reduction."

What to Look For: Look for candidates who can clearly distinguish between supervised and unsupervised learning, demonstrating an understanding of their respective purposes and applications. Strong candidates will provide examples of real-world tasks that fall under each category.

How to Answer: Differentiate between classification and regression tasks in machine learning. Explain how classification involves predicting discrete labels or categories, while regression entails predicting continuous numerical values. Discuss the types of algorithms commonly used for each task, such as logistic regression for classification and linear regression for regression.

Sample Answer: "Classification and regression are two fundamental types of supervised learning tasks in machine learning. Classification involves predicting discrete labels or categories for input data, such as classifying emails as spam or non-spam. Regression, on the other hand, entails predicting continuous numerical values, such as predicting house prices based on features like square footage and location. Classification tasks often utilize algorithms like logistic regression or decision trees, while regression tasks commonly employ linear regression or support vector regression."

What to Look For: Seek candidates who can clearly articulate the distinctions between classification and regression tasks, including the types of data they deal with and the algorithms typically used for each task. Look for examples that demonstrate their understanding of real-world applications for both classification and regression.

How to Answer: Define feature scaling and discuss its importance in machine learning. Explain how feature scaling standardizes the range of independent variables to ensure that no single feature dominates the others. Mention common techniques such as Min-Max scaling and standardization.

Sample Answer: "Feature scaling is a preprocessing technique used to standardize the range of independent variables or features in machine learning datasets. It's important because it ensures that no single feature dominates the others due to differences in scale or units. Common methods of feature scaling include Min-Max scaling, which rescales features to a fixed range, and standardization, which scales features to have a mean of zero and a standard deviation of one."

What to Look For: Look for candidates who can explain the purpose of feature scaling and its impact on machine learning models. Strong candidates will be able to discuss different scaling techniques and when to use them based on the characteristics of the data.
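The two scaling methods named above can be sketched in a few lines of NumPy. The feature matrix is hypothetical (age and income), and the helpers assume every column has at least two distinct values, since a constant column would cause a division by zero.

```python
import numpy as np

# Hypothetical features on very different scales: age (years) and income.
X = np.array(
    [[25, 40_000], [32, 52_000], [47, 95_000], [51, 150_000]],
    dtype=float,
)


def min_max_scale(X):
    """Rescale each column to the fixed range [0, 1]."""
    col_min = X.min(axis=0)
    col_max = X.max(axis=0)
    return (X - col_min) / (col_max - col_min)


def standardize(X):
    """Rescale each column to mean 0 and standard deviation 1."""
    return (X - X.mean(axis=0)) / X.std(axis=0)


X_minmax = min_max_scale(X)
X_std = standardize(X)

print(np.round(X_minmax, 3))
print(np.round(X_std, 3))
```

In practice the scaling statistics (minimum, maximum, mean, standard deviation) are computed on the training data only and then reused to transform validation and test data.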

How to Answer: Define categorical variables and explain how they represent qualitative data with discrete categories or levels. Discuss strategies for handling categorical variables in machine learning models, such as one-hot encoding or label encoding.

Sample Answer: "Categorical variables are variables that represent qualitative data with discrete categories or levels. Examples include gender, color, or product type. In machine learning, categorical variables need to be converted into a numerical format that algorithms can process. One common approach is one-hot encoding, which creates binary columns for each category, indicating its presence or absence in the data. Another approach is label encoding, which assigns a unique numerical label to each category."

What to Look For: Seek candidates who can clearly define categorical variables and explain how they are handled in machine learning models. Look for an understanding of the advantages and disadvantages of different encoding techniques and when to use each method.
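As a small illustration of the two encodings, the following plain-Python sketch encodes a hypothetical `colors` column. The helper names are made up for this example; libraries such as pandas and scikit-learn provide their own encoders.

```python
def label_encode(values):
    """Map each category to an integer, using sorted order for stability."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    return [index[v] for v in values], categories


def one_hot_encode(values):
    """Create one binary column per category (1 = present, 0 = absent)."""
    labels, categories = label_encode(values)
    rows = [
        [1 if label == k else 0 for k in range(len(categories))]
        for label in labels
    ]
    return rows, categories


colors = ["red", "green", "blue", "green"]
labels, categories = label_encode(colors)
one_hot, _ = one_hot_encode(colors)

print(categories)  # ['blue', 'green', 'red']
print(labels)      # [2, 1, 0, 1]
print(one_hot)     # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```

Label encoding is compact but imposes an artificial order (here blue < green < red), which some models may treat as meaningful. One-hot encoding avoids that at the cost of one extra column per category.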

How to Answer: Discuss the significance of data visualization in data science and analytics. Explain how visual representations of data help in gaining insights, identifying patterns, and communicating findings effectively to stakeholders.

Sample Answer: "Data visualization plays a crucial role in data science by making complex datasets more accessible and understandable. Visualizations help in exploring data, identifying patterns, trends, and outliers that may not be apparent from raw data alone. They also facilitate effective communication of insights to stakeholders, enabling data-driven decision-making. Visualizations can range from simple charts and graphs to interactive dashboards, providing different levels of detail and interactivity."

What to Look For: Look for candidates who can articulate the importance of data visualization in data science projects. Strong candidates will provide examples of how visualizations can enhance data exploration, analysis, and communication of findings to diverse audiences.

How to Answer: Identify the essential elements of effective data visualizations. Discuss aspects such as clarity, accuracy, relevance, and aesthetics. Explain how choosing the right type of visualization for the data and audience is critical for conveying insights effectively.

Sample Answer: "A good data visualization should be clear, accurate, relevant, and visually appealing. Clarity ensures that the message conveyed by the visualization is easy to understand, with clear labels, titles, and legends. Accuracy is crucial to represent data truthfully and avoid misleading interpretations. Relevance ensures that the visualization addresses the intended questions or objectives of the analysis. Aesthetics, including color choices and layout, can enhance the visual appeal and engagement of the audience. Additionally, selecting the appropriate type of visualization based on the data's characteristics and the audience's preferences is essential for effective communication."

What to Look For: Seek candidates who can identify the key components of effective data visualizations and explain why each component is important. Look for examples or insights into how they prioritize clarity, accuracy, relevance, and aesthetics in their visualization practices.

How to Answer: Discuss various ethical considerations that may arise in data science projects, such as privacy, fairness, transparency, and accountability. Provide examples of how biased data or algorithms can lead to unintended consequences or perpetuate societal inequalities.

Sample Answer: "Ethical considerations in data science projects are paramount, as they can have significant implications for individuals, communities, and society as a whole. Privacy concerns arise when handling sensitive or personally identifiable information, requiring measures to protect data confidentiality and security. Fairness is crucial to ensure that algorithms do not discriminate against certain groups or individuals based on protected characteristics such as race, gender, or ethnicity. Transparency involves disclosing the data sources, methods, and assumptions underlying the analysis to promote accountability and trust. Additionally, it's essential to consider the potential impact of data and algorithms on vulnerable populations and to mitigate biases that may exacerbate existing inequalities."

What to Look For: Look for candidates who demonstrate an awareness of ethical considerations in data science projects and can discuss strategies for addressing them. Strong candidates will provide examples or scenarios illustrating how they navigate ethical challenges in their work.

How to Answer: Explain techniques for detecting and mitigating bias in machine learning models. Discuss approaches such as bias assessment during data collection and preprocessing, fairness-aware algorithms, and post-hoc bias mitigation strategies.

Sample Answer: "Identifying and mitigating bias in machine learning models requires a multifaceted approach throughout the entire data science pipeline. It starts with conducting thorough bias assessments during data collection and preprocessing to identify potential sources of bias. Pre-processing techniques, such as reweighting or re-sampling biased training data, can reduce bias before a model is trained. Fairness-aware algorithms, which aim to minimize disparate impacts on different demographic groups, can be employed during model training. Post-hoc bias mitigation techniques, such as adjusting decision thresholds for different groups, can help address bias in existing models. Additionally, ongoing monitoring and evaluation of model performance for fairness and equity are essential to ensure that biases are continuously identified and mitigated."

What to Look For: Look for candidates who demonstrate a comprehensive understanding of bias mitigation techniques in machine learning models. Strong candidates will discuss proactive measures taken at various stages of the data science pipeline to address bias and promote fairness.
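Reweighting the training data, mentioned in the sample answer above, can be sketched as follows. This is one common formulation and an assumption on our part rather than the only option: each example is weighted by the ratio of the expected to the observed frequency of its (group, label) combination, so that group membership and label become statistically independent in the weighted data. The groups `"a"` and `"b"` and the labels are toy values.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight = P(group) * P(label) / P(group, label) for each example."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = (group_counts[g] / n) * (label_counts[y] / n)
        observed = joint_counts[(g, y)] / n
        weights.append(expected / observed)
    return weights


def weighted_positive_rate(groups, labels, weights, group):
    """Share of the total weight in `group` that carries a positive label."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


# Toy data: group "a" has a much higher positive rate than group "b".
groups = ["a"] * 6 + ["b"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(f"weighted positive rate: a={rate_a:.2f}, b={rate_b:.2f}")
```

The resulting weights would then be passed to a learner that supports per-sample weights. Equalizing the weighted positive rates addresses only one notion of fairness, so other metrics still need to be checked.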

How to Answer: Explain the concepts of batch processing and stream processing in the context of big data systems. Discuss how batch processing involves processing data in large, discrete chunks or batches, while stream processing deals with continuous, real-time data streams.

Sample Answer: "Batch processing and stream processing are two fundamental approaches to handling data in big data systems. Batch processing involves processing data in large, discrete chunks or batches, typically collected over a period of time. This approach is well-suited for tasks that can tolerate some latency, such as offline analytics or scheduled reports. Stream processing, on the other hand, deals with continuous, real-time data streams, processing data as it arrives. Stream processing is essential for applications requiring low-latency responses, such as real-time monitoring, fraud detection, or IoT data processing."

What to Look For: Look for candidates who can articulate the differences between batch processing and stream processing in the context of big data systems. Strong candidates will provide examples of use cases for each approach and discuss their respective advantages and challenges.
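The difference can be shown on a single machine with a toy example. The sensor readings and function names below are hypothetical. The batch version needs the whole dataset before it produces one answer, while the streaming version updates its answer as each value arrives and keeps only constant state.

```python
def batch_mean(readings):
    """Batch: wait until all data is collected, then compute once."""
    return sum(readings) / len(readings)


def streaming_mean(readings):
    """Stream: emit an updated mean after every incoming value."""
    count = 0
    mean = 0.0
    for value in readings:
        count += 1
        # Incremental update: no need to store past values.
        mean += (value - mean) / count
        yield mean


readings = [12.0, 15.0, 11.0, 14.0, 18.0]

batch_result = batch_mean(readings)
stream_results = list(streaming_mean(iter(readings)))

print("batch: ", batch_result)
print("stream:", [round(m, 3) for m in stream_results])
```

Both approaches agree once all the data has been seen. The streaming version simply makes intermediate results available with low latency, which is what real-time monitoring or fraud detection requires.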

How to Answer: Describe the role of distributed computing frameworks such as Apache Hadoop and Apache Spark in facilitating big data processing. Explain how these frameworks distribute data and computation across multiple nodes in a cluster, enabling parallel processing and fault tolerance.

Sample Answer: "Distributed computing frameworks like Apache Hadoop and Apache Spark play a crucial role in enabling big data processing at scale. These frameworks distribute data and computation across multiple nodes in a cluster, allowing for parallel processing of large datasets. Apache Hadoop, with its Hadoop Distributed File System (HDFS) and MapReduce programming model, pioneered the distributed processing of big data. Apache Spark builds upon this foundation by introducing in-memory processing and a more versatile programming model, making it faster and more efficient for a wide range of data processing tasks. Both frameworks provide fault tolerance mechanisms to ensure reliable processing in distributed environments."

What to Look For: Seek candidates who can explain how distributed computing frameworks enable big data processing and discuss the features and advantages of platforms like Apache Hadoop and Apache Spark. Look for insights into how these frameworks address challenges such as scalability and fault tolerance.
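The MapReduce programming model mentioned above can be imitated in plain Python to show its three stages. This is a single-process sketch, not the Hadoop or Spark API; the function names and the two text "partitions" are invented for illustration. In a real cluster the map and reduce calls would run in parallel on different nodes.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one partition."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(mapped_pairs):
    """Shuffle: group all values that share the same key."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values of each key into a single result."""
    return {key: sum(values) for key, values in grouped.items()}


# Each string stands in for a data partition stored on a different node.
partitions = [
    "big data needs big clusters",
    "spark and hadoop process big data",
]

mapped = [pair for doc in partitions for pair in map_phase(doc)]
word_counts = reduce_phase(shuffle_phase(mapped))
print(word_counts)
```

Because each map call touches only its own partition and each reduce call touches only one key, the work can be spread across machines and rerun on another node if one fails.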

How to Answer: Identify and discuss the main challenges encountered in natural language processing tasks. Topics may include ambiguity, context understanding, language variability, and domain-specific language.

Sample Answer: "Natural language processing (NLP) tasks face several challenges that arise from the complexity and variability of human language. Ambiguity is a significant challenge, as words and phrases can have multiple meanings depending on context. Understanding context is essential for tasks like sentiment analysis or named entity recognition, where the meaning of words or phrases may change based on the surrounding context. Language variability, including slang, dialects, and grammatical variations, adds another layer of complexity to NLP tasks. Additionally, domain-specific language presents challenges when applying NLP techniques to specialized domains such as healthcare or finance."

What to Look For: Look for candidates who can identify and articulate the challenges inherent in natural language processing tasks. Strong candidates will provide examples and insights into how these challenges impact the development and deployment of NLP models.

How to Answer: Explain the concept of word embeddings and how techniques like Word2Vec and GloVe improve natural language processing tasks. Discuss how word embeddings represent words as dense vectors in a continuous space, capturing semantic relationships and context.

Sample Answer: "Word embeddings are dense vector representations of words in a continuous space, learned from large corpora of text data. Techniques like Word2Vec and GloVe are popular methods for generating word embeddings and improving natural language processing tasks. These embeddings capture semantic relationships between words by placing similar words closer together in the vector space. This allows NLP models to leverage contextual information and semantic similarities between words, leading to better performance in tasks like text classification, sentiment analysis, and machine translation."

What to Look For: Seek candidates who can explain the concept of word embeddings and how they enhance NLP tasks. Look for examples or illustrations of how word embeddings capture semantic relationships and improve the performance of NLP models.
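The idea that similar words sit closer together in the vector space is usually measured with cosine similarity. The three-dimensional vectors below are hand-made for illustration and are not real Word2Vec or GloVe output; trained embeddings typically have tens to hundreds of dimensions.

```python
import math

# Toy embeddings (hypothetical values, not from a trained model).
embeddings = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.75, 0.70, 0.15],
    "apple": [0.10, 0.20, 0.90],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


sim_king_queen = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_king_apple = cosine_similarity(embeddings["king"], embeddings["apple"])

print(f"king vs queen: {sim_king_queen:.3f}")
print(f"king vs apple: {sim_king_apple:.3f}")
```

With these toy vectors, "king" and "queen" point in nearly the same direction while "king" and "apple" do not, mirroring how trained embeddings place related words near each other.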

How to Answer: Identify and discuss the key components of time series data, including trend, seasonality, cyclic patterns, and irregular fluctuations.

Sample Answer: "A time series consists of four main components: trend, seasonality, cyclic patterns, and irregular fluctuations. The trend component represents the long-term direction or movement of the data, indicating whether it is increasing, decreasing, or remaining relatively stable over time. Seasonality refers to repetitive patterns or fluctuations that occur at fixed intervals, such as daily, weekly, or yearly cycles. Cyclic patterns are similar to seasonality but occur at irregular intervals and may not have fixed periods. Irregular fluctuations, also known as noise or residuals, represent random variations or disturbances in the data that cannot be attributed to the other components."

What to Look For: Look for candidates who can identify and explain the key components of a time series and their characteristics. Strong candidates will demonstrate an understanding of how these components contribute to the overall behavior of time series data.
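A simple additive decomposition shows how these components can be separated in practice. The sketch below builds a synthetic monthly series from a known trend, a 12-step seasonal pattern, and random noise (all assumptions), then recovers them with a centered moving average. The cyclic component is left out to keep the example short.

```python
import numpy as np

rng = np.random.default_rng(0)
period = 12                       # e.g. monthly data with a yearly pattern
t = np.arange(48)

# Synthetic series = trend + seasonality + irregular noise.
trend = 0.5 * t
seasonal = 10.0 * np.sin(2 * np.pi * t / period)
noise = rng.normal(scale=1.0, size=t.size)
series = trend + seasonal + noise

# Centered moving average over one full period: it averages out the
# seasonal pattern and leaves an estimate of the trend.
kernel = np.ones(period + 1)
kernel[0] = kernel[-1] = 0.5
kernel /= period
trend_est = np.convolve(series, kernel, mode="valid")

# The moving average is undefined for the first and last half period.
half = period // 2
t_valid = t[half:-half]
detrended = series[half:-half] - trend_est

# Seasonal estimate: average the detrended values at each position in the cycle.
phases = t_valid % period
seasonal_est = np.array([detrended[phases == k].mean() for k in range(period)])

# Whatever is left is the irregular (residual) component.
residual = detrended - seasonal_est[phases]

print("estimated seasonal pattern:", np.round(seasonal_est, 2))
print("residual std:", round(float(residual.std()), 3))
```

The estimated trend tracks the true slope and the seasonal estimate peaks near the third step of each cycle, where the synthetic sine wave reaches its maximum.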

Technical data science interview topics form the core of the assessment process, evaluating candidates' proficiency in various aspects of data manipulation, analysis, and modeling. Let's delve into each of these topics to understand what they entail and how candidates can prepare effectively.

Data cleaning and preprocessing are essential steps in the data science workflow, ensuring that datasets are clean, consistent, and ready for analysis.

Exploratory data analysis involves examining and visualizing datasets to understand their underlying structure, patterns, and relationships.

Machine learning forms the backbone of many data science applications, enabling algorithms to learn from data and make predictions or decisions.

Deep learning is a subfield of machine learning that focuses on training neural networks to learn from large volumes of data.

With the increasing volume and complexity of data, big data technologies are essential for processing and analyzing large datasets efficiently.

In addition to technical proficiency, data science interviews also assess candidates' behavioral traits and their ability to apply technical knowledge to real-world scenarios. Let's explore the key topics covered in behavioral and case study interviews and how candidates can effectively prepare for them.

Problem-solving and critical thinking are essential skills for data scientists, enabling them to approach complex challenges methodically and develop innovative solutions.

Effective communication and collaboration are critical for data scientists to convey their findings to stakeholders, work in cross-functional teams, and drive consensus around data-driven decisions.

Case study interviews present candidates with real-world data science scenarios or problems and assess their ability to apply technical knowledge to practical situations.

Data science applications vary across industries, with each sector posing unique challenges and opportunities for analysis and insights. Let's explore the specific topics covered in data science interviews within various industries and how candidates can prepare for them effectively.

In the finance and banking sector, data science is instrumental in risk management, fraud detection, customer segmentation, and algorithmic trading.

In healthcare, data science is used for patient diagnosis, treatment optimization, drug discovery, and health outcomes research.

In the e-commerce and retail sector, data science is used for customer segmentation, personalized recommendations, demand forecasting, and inventory optimization.

In the technology and software development sector, data science is used for product analytics, user behavior analysis, software optimization, and performance monitoring.

Soft skills are becoming increasingly important in the field of data science, complementing technical expertise and enhancing overall effectiveness in the workplace. Let's explore the key soft skills and personal development areas that are relevant for data science roles.

Leadership and teamwork are essential for data scientists to collaborate effectively with cross-functional teams and drive projects to successful outcomes.

Data science is a rapidly evolving field, with new technologies, tools, and methodologies emerging regularly. Candidates should demonstrate adaptability and a commitment to continuous learning to stay abreast of the latest developments and remain competitive in the industry.

Ethical considerations are paramount in data science, given the potential impact of data-driven decisions on individuals, organizations, and society at large. Candidates should demonstrate an awareness of ethical principles and a commitment to upholding ethical standards in their work.

Preparation is key to success in data science interviews.

Mastering data science interview questions is not just about technical proficiency; it's about a holistic approach that combines technical skills, problem-solving abilities, and effective communication. By understanding the different types of interview questions, preparing thoroughly, and showcasing your skills with confidence, you can increase your chances of success in landing your dream data science role.

Remember, each interview is an opportunity to learn and grow, regardless of the outcome. Use feedback from interviews to identify areas for improvement, continue honing your skills, and stay curious about the ever-evolving field of data science. With dedication, practice, and a positive mindset, you can conquer data science interviews and embark on a rewarding career journey in the dynamic world of data science.