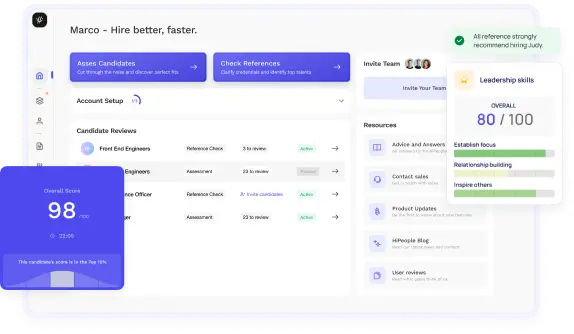

Streamline hiring with effortless screening tools

Optimise your hiring process with HiPeople's AI assessments and reference checks.

Whether you are a beginner or an experienced professional, this guide will equip you with the knowledge and confidence to excel in your Kubernetes interviews. Throughout this guide, we will explore the fundamental concepts, architecture, management, networking, security, monitoring, storage, advanced topics, common challenges, and the future of Kubernetes.

Kubernetes has revolutionized the world of container orchestration and has become the de facto standard for managing containerized applications. Before diving into the interview questions, let's briefly understand what Kubernetes is and why it's essential in modern IT infrastructure.

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform originally developed by Google and now maintained under the Cloud Native Computing Foundation (CNCF). It automates the deployment, scaling, and management of containerized applications. With Kubernetes, you can abstract the underlying infrastructure, making it easier to deploy and run applications in a consistent and scalable manner.

Kubernetes brings numerous benefits to the table, making it a critical technology for organizations embracing cloud-native applications: it automates deployment, scaling, and management, and it gives applications a consistent way to run across different infrastructure.

At its core, Kubernetes operates on a cluster of nodes, where each node is a virtual or physical machine.

In this section, we will delve deeper into the architecture of Kubernetes and explore its core components.

Kubernetes follows a distributed architecture, with each cluster consisting of one or more control plane nodes (historically called master nodes) and multiple worker nodes. The control plane nodes are responsible for managing the cluster, while the worker nodes host the actual containers.

The Kubernetes control plane is the brain of the cluster, comprising various components that collaborate to make the cluster function cohesively. These components include the API server, etcd, the scheduler, and the controller manager.

etcd is a distributed, consistent key-value store used to store the cluster's configuration data. It acts as the primary datastore for Kubernetes and ensures that the cluster remains resilient to failures.

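Kubernetes supports various container runtimes through the Container Runtime Interface (CRI), with containerd and CRI-O being common choices. Docker Engine was long the most commonly used runtime, but the built-in support for it (dockershim) was removed in Kubernetes 1.24; images built with Docker still run unchanged. Container images, which include the application and its dependencies, are pulled from container registries and run within containers on the worker nodes.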

The Container Network Interface (CNI) is a specification for network plugins that lets a Kubernetes cluster use various networking solutions. The chosen CNI plugin provides the Pod network that enables communication between containers across the cluster.

The Container Storage Interface (CSI) is a standard that enables Kubernetes to work with different storage systems. It simplifies storage integration with Kubernetes, making it easier to use different storage solutions for persistent volumes.

Understanding core Kubernetes concepts is vital for any Kubernetes interview. Let's explore these concepts in detail.

A Pod is the smallest deployable unit in Kubernetes, representing one or more containers that are scheduled together on the same worker node. Containers within a Pod share the same network namespace and can communicate via localhost.

Deployments and ReplicaSets are higher-level abstractions that enable you to manage the desired state of replicated Pods. Deployments provide declarative updates to Pods, making it easier to manage rolling updates and rollbacks.

StatefulSets are used for managing stateful applications in Kubernetes. They ensure that each Pod has a stable, unique identity and stable network identity, which is critical for stateful applications.
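
The stable identity follows a predictable naming pattern, which the sketch below illustrates. It assumes the default ordinal start of 0, the default cluster domain `cluster.local`, and a governing headless Service; the names `web`, `nginx`, and `default` are hypothetical.

```python
def statefulset_pod_names(name: str, replicas: int) -> list:
    """Pods in a StatefulSet are named <name>-<ordinal>, starting at 0 by default."""
    return [f"{name}-{i}" for i in range(replicas)]


def pod_dns_name(pod: str, service: str, namespace: str,
                 cluster_domain: str = "cluster.local") -> str:
    """Per-Pod DNS record provided through the governing headless Service."""
    return f"{pod}.{service}.{namespace}.svc.{cluster_domain}"


pods = statefulset_pod_names("web", 3)
print(pods)  # ['web-0', 'web-1', 'web-2']
print(pod_dns_name(pods[0], "nginx", "default"))
```

Because the name and DNS record survive rescheduling, a replica such as `web-0` can be addressed directly by its peers.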

DaemonSets ensure that a copy of a specific Pod is running on each node in the cluster, making them ideal for running monitoring agents or networking daemons. On the other hand, Jobs are used for running batch tasks to completion.

Kubernetes Services abstract the underlying network and provide a stable IP address and DNS name to access Pods. They enable seamless communication between different parts of your application.

Ingress Controllers manage incoming traffic to your cluster, acting as the entry point for external requests. Ingress Resources define the rules for routing incoming traffic to different services in the cluster.

ConfigMaps allow you to decouple configuration data from your containerized application, making it easier to manage configurations separately. Secrets, on the other hand, securely store sensitive data, such as passwords and API keys.

Persistent Volumes (PVs) are storage volumes that exist beyond the lifecycle of a Pod. Persistent Volume Claims (PVCs) are requests for storage of a specific size and access mode, which Pods then reference by name. PVCs bind to PVs, providing data persistence for applications.

Namespaces provide an isolated environment for your resources within a cluster, preventing naming conflicts. Resource Quotas allow you to limit the amount of resources that can be consumed within a namespace.

Custom Resource Definitions (CRDs) enable you to define custom resources in Kubernetes. Operators are Kubernetes controllers that use CRDs to automate complex application management tasks.

Now that we have a solid understanding of core Kubernetes concepts, let's explore how to effectively manage Kubernetes resources.

To create and manage Pods, you need to define a Pod manifest in a YAML file and use the kubectl command-line tool to apply it to the cluster. The manifest includes details like the container image, resource limits, environment variables, and more.
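
As a minimal sketch of such a manifest, the snippet below builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The Pod name, label, image tag, environment variable, and resource values are illustrative assumptions.

```python
import json

# Hypothetical Pod: every name and value here is illustrative.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo-pod", "labels": {"app": "demo"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "env": [{"name": "LOG_LEVEL", "value": "info"}],
                "resources": {
                    "requests": {"cpu": "100m", "memory": "64Mi"},
                    "limits": {"cpu": "250m", "memory": "128Mi"},
                },
            }
        ]
    },
}

# Save the output to a file such as pod.json and run: kubectl apply -f pod.json
print(json.dumps(pod_manifest, indent=2))
```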

Deployments enable you to declaratively manage the desired state of your application: you describe the Pod template and the replica count, and the Deployment controller creates and updates ReplicaSets to match.
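
A Deployment manifest might look like the following sketch, again built as a Python dictionary and printed as JSON. The name `demo`, the label, and the image tag are assumptions for illustration.

```python
import json

labels = {"app": "demo"}  # hypothetical label shared by selector and template

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "demo"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": labels},
        "template": {
            "metadata": {"labels": labels},
            "spec": {"containers": [{"name": "web", "image": "nginx:1.25"}]},
        },
    },
}

# The API server rejects a Deployment whose selector does not match the
# labels of its own Pod template, so check that before applying.
selector = deployment["spec"]["selector"]["matchLabels"]
template_labels = deployment["spec"]["template"]["metadata"]["labels"]
assert all(template_labels.get(k) == v for k, v in selector.items())

print(json.dumps(deployment, indent=2))
```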

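Horizontal Pod Autoscaler (HPA) automatically scales the number of Pods based on CPU utilization or custom metrics. To use HPA with CPU utilization, the target workload's containers need CPU requests set and the cluster needs a metrics source such as Metrics Server.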
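
The core scaling rule documented for HPA is `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The sketch below applies that rule with the default 10% tolerance and a min/max clamp; it is a simplification that ignores stabilization windows, unready Pods, and missing metrics, and the default bounds are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=62, target_metric=60))  # 4
print(desired_replicas(4, current_metric=30, target_metric=60))  # 2
```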

Rolling updates allow you to update your application without downtime by gradually replacing old Pods with new ones. In case of any issues, you can perform rollbacks to the previous version.
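
How gradual a rollout is depends on the Deployment's `maxSurge` and `maxUnavailable` settings, which both default to 25%. The sketch below computes the resulting bounds, assuming percentage values only: `maxSurge` is rounded up and `maxUnavailable` is rounded down.

```python
import math


def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple:
    """Return (minimum available Pods, maximum total Pods) during a rollout."""
    surge = math.ceil(replicas * max_surge_pct / 100)               # rounds up
    unavailable = math.floor(replicas * max_unavailable_pct / 100)  # rounds down
    return replicas - unavailable, replicas + surge


print(rolling_update_bounds(10))  # (8, 13)
print(rolling_update_bounds(4))   # (3, 5)
```

With 10 replicas and the defaults, at least 8 Pods stay available and at most 13 exist at any point in the rollout.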

ConfigMaps and Secrets allow you to manage configuration data and sensitive information separately from your application code. Both can be exposed to containers as environment variables or as files in a mounted volume.
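
The sketch below builds one Secret and one ConfigMap as Python dictionaries. It also shows that the Base64 encoding used in a Secret's `data` field is trivially reversible, which is why access control and encryption at rest matter. All names and values are hypothetical.

```python
import base64
import json

password = "s3cr3t"  # hypothetical value for illustration only

secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    # Values under `data` must be Base64-encoded strings.
    "data": {"password": base64.b64encode(password.encode()).decode()},
}

config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config"},
    "data": {"LOG_LEVEL": "info"},  # plain strings, no encoding
}

print(json.dumps(secret, indent=2))
print(json.dumps(config_map, indent=2))

# Anyone who can read the Secret can reverse the encoding:
print(base64.b64decode(secret["data"]["password"]).decode())
```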

Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) are essential for handling data in Kubernetes. A Pod references a PVC by name in its volume definition, and the PVC is bound to a PV that satisfies its request.
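
The matching between a PVC and a PV can be pictured with the toy model below. It is not the real controller logic: it only compares capacity, access modes, and storage class, and the dictionary field names are invented for this illustration.

```python
def can_bind(pvc: dict, pv: dict) -> bool:
    """Simplified check; the real controller also considers selectors, volume modes, etc."""
    return (
        pv["capacity_gi"] >= pvc["request_gi"]
        and set(pvc["access_modes"]) <= set(pv["access_modes"])
        and pv["storage_class"] == pvc["storage_class"]
        and not pv["bound"]
    )


pvs = [
    {"name": "pv-small", "capacity_gi": 5, "access_modes": ["ReadWriteOnce"],
     "storage_class": "standard", "bound": False},
    {"name": "pv-large", "capacity_gi": 20, "access_modes": ["ReadWriteOnce"],
     "storage_class": "standard", "bound": False},
]
pvc = {"request_gi": 10, "access_modes": ["ReadWriteOnce"], "storage_class": "standard"}

matches = [pv["name"] for pv in pvs if can_bind(pvc, pv)]
print(matches)  # ['pv-large']
```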

Kubernetes schedules Pods on worker nodes based on resource requirements, node availability, and other constraints. The scheduler watches for newly created Pods that have no node assigned and selects a suitable node for each.
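
Conceptually, scheduling has a filtering step (which nodes can fit the Pod) followed by a scoring step (which feasible node is best). The sketch below mimics that with a toy scoring rule; the field names and the "most free CPU wins" rule are assumptions, not the real scheduler's behavior.

```python
from typing import Optional


def schedule(pod: dict, nodes: list) -> Optional[str]:
    """Filter nodes that fit the Pod's requests, then pick one by a toy score."""
    feasible = [
        n for n in nodes
        if n["free_cpu_m"] >= pod["cpu_m"] and n["free_mem_mi"] >= pod["mem_mi"]
    ]
    if not feasible:
        return None  # in a real cluster the Pod would stay Pending
    # Toy scoring rule (an assumption): prefer the node with the most free CPU.
    return max(feasible, key=lambda n: n["free_cpu_m"])["name"]


nodes = [
    {"name": "node-a", "free_cpu_m": 500, "free_mem_mi": 1024},
    {"name": "node-b", "free_cpu_m": 2000, "free_mem_mi": 512},
    {"name": "node-c", "free_cpu_m": 1500, "free_mem_mi": 4096},
]

print(schedule({"cpu_m": 1000, "mem_mi": 1024}, nodes))  # node-c
print(schedule({"cpu_m": 4000, "mem_mi": 1024}, nodes))  # None
```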

Kubernetes networking plays a crucial role in ensuring seamless communication between Pods and services within the cluster. Let's dive into various networking concepts in Kubernetes.

In a Kubernetes cluster, each node has its IP address range for Pods. Containers within a Pod can communicate with each other using localhost, while Pods across nodes can communicate through the Pod network.

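Kubernetes Services enable communication between different parts of your application within the cluster. There are four types of Services: ClusterIP (the default, reachable only inside the cluster), NodePort (exposed on a static port on every node), LoadBalancer (exposed through an external load balancer), and ExternalName (mapped to an external DNS name).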
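
A Service finds its backing Pods through a label selector. The sketch below pairs a ClusterIP Service manifest with a small model of that selection; the Pod list and all names are hypothetical.

```python
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "demo"},
    "spec": {
        "type": "ClusterIP",
        "selector": {"app": "demo"},
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

pods = [
    {"name": "demo-1", "labels": {"app": "demo", "tier": "web"}},
    {"name": "demo-2", "labels": {"app": "demo"}},
    {"name": "other-1", "labels": {"app": "other"}},
]

# A Pod backs the Service when it carries every label in the selector.
selector = service["spec"]["selector"]
backends = [p["name"] for p in pods
            if all(p["labels"].get(k) == v for k, v in selector.items())]
print(backends)  # ['demo-1', 'demo-2']
```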

Headless Services do not allocate a virtual IP and do not load balance. Instead, they return the Pod's individual IP addresses directly. They are useful for stateful applications that require direct communication with specific Pods.

Kubernetes provides DNS-based service discovery, allowing Pods to locate and communicate with Services using their DNS names. This mechanism simplifies communication between different services within the cluster.
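
Service DNS names follow the pattern `<service>.<namespace>.svc.<cluster-domain>`. The sketch below lists some of the names a client Pod can use, assuming the default cluster domain `cluster.local`; the Service and namespace names are hypothetical.

```python
def names_for(service: str, namespace: str, caller_namespace: str,
              cluster_domain: str = "cluster.local") -> list:
    """Some DNS names that resolve to a Service from a Pod in caller_namespace."""
    names = [
        f"{service}.{namespace}",
        f"{service}.{namespace}.svc.{cluster_domain}",
    ]
    if caller_namespace == namespace:
        names.insert(0, service)  # the short name only works within the namespace
    return names


print(names_for("db", "prod", caller_namespace="prod"))
print(names_for("db", "prod", caller_namespace="staging"))
```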

Ingress Controllers act as an entry point to the cluster and manage incoming external traffic. They rely on Ingress Resources to define routing rules for HTTP and HTTPS traffic to different Services within the cluster.

Network Policies enable you to control the traffic flow between Pods by defining rules for incoming and outgoing traffic. They allow you to enforce security measures and isolate Pods based on labels.
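
As an illustration, the following sketch builds a NetworkPolicy that lets only Pods labeled `app: api` reach Pods labeled `app: db` on TCP port 5432. The labels, names, and port are assumptions, and the policy only takes effect if the cluster's network plugin enforces Network Policies.

```python
import json

network_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "db-allow-api", "namespace": "default"},
    "spec": {
        # Pods this policy applies to.
        "podSelector": {"matchLabels": {"app": "db"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                # Allowed sources and ports; all other ingress is denied.
                "from": [{"podSelector": {"matchLabels": {"app": "api"}}}],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
    },
}

print(json.dumps(network_policy, indent=2))
```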

Containers within the same Pod can communicate with each other using localhost. This direct communication facilitates microservices architecture and avoids unnecessary network hops.

Securing Kubernetes clusters is of utmost importance to prevent unauthorized access and potential data breaches. Let's explore the various security aspects of Kubernetes.

Role-Based Access Control (RBAC) is a crucial security feature that provides fine-grained control over who can access and perform operations within the cluster. RBAC defines roles and role bindings to grant permissions to users, groups, or service accounts.
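
RBAC rules are purely additive: a request is allowed if any rule in a bound role matches its API group, resource, and verb. The sketch below models that check in a simplified form; it ignores `resourceNames`, subresources, and non-resource URLs, and the `pod_reader` role is a hypothetical example.

```python
def rule_allows(rule: dict, api_group: str, resource: str, verb: str) -> bool:
    """True if one rule matches; '*' acts as a wildcard."""
    def matches(allowed: list, value: str) -> bool:
        return "*" in allowed or value in allowed

    return (matches(rule["apiGroups"], api_group)
            and matches(rule["resources"], resource)
            and matches(rule["verbs"], verb))


def role_allows(role: dict, api_group: str, resource: str, verb: str) -> bool:
    """A role allows a request if any of its rules does (there are no deny rules)."""
    return any(rule_allows(r, api_group, resource, verb) for r in role["rules"])


# "" is the core API group, where Pods and Secrets live.
pod_reader = {
    "rules": [
        {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
    ]
}

print(role_allows(pod_reader, "", "pods", "list"))    # True
print(role_allows(pod_reader, "", "pods", "delete"))  # False
print(role_allows(pod_reader, "", "secrets", "get"))  # False
```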

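Pod Security Policies (PSPs) defined a set of security requirements that Pods had to meet before being admitted to the cluster. PSPs were deprecated in Kubernetes 1.21 and removed in 1.25; their replacement is Pod Security Admission, which enforces the Pod Security Standards (Privileged, Baseline, and Restricted) at the namespace level.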

Network Policies, as mentioned earlier, control the flow of network traffic between Pods. By defining explicit rules, you can restrict communication and ensure a secure network environment.

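Kubernetes Secrets allow you to store sensitive data, such as passwords and API keys, separately from Pod specs and container images. By default, Secrets are only Base64-encoded in etcd, so enabling encryption at rest and restricting access with RBAC is needed to keep critical information confidential.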

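To maintain a secure Kubernetes environment, follow best practices such as granting least-privilege permissions through RBAC, restricting Pod-to-Pod traffic with Network Policies, encrypting Secrets at rest, and running only trusted, regularly updated, and vulnerability-scanned container images.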

Monitoring and logging are essential for understanding the health and performance of your Kubernetes cluster and applications.

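Common tools for monitoring cluster and application metrics include Metrics Server, which collects CPU and memory usage from nodes and Pods, and Prometheus, which stores time-series metrics for querying and alerting.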

Kubernetes clusters generate logs from various components and containers. Centralized logging solutions, such as Elasticsearch, Fluentd, and Kibana (EFK stack), or Loki and Grafana (Grafana Loki), can collect and store logs for easier troubleshooting.

Prometheus and Grafana integration offers powerful monitoring capabilities. Prometheus scrapes metrics from various endpoints, while Grafana visualizes these metrics in real-time dashboards.

Distributed tracing tools like Jaeger and Zipkin can be integrated into your Kubernetes applications to trace requests across services, making it easier to troubleshoot performance issues.

Storage in Kubernetes can be complex, but it's essential for stateful applications. Let's explore various storage-related concepts and best practices.

Persistent Volumes (PVs) are cluster-wide storage volumes provisioned by the cluster administrator. Persistent Volume Claims (PVCs) are requests for storage resources by Pods.

Storage Classes are used to dynamically provision Persistent Volumes based on demand. Each Storage Class maps to a particular storage provider or type.

Dynamic Provisioning automatically creates Persistent Volumes when PVCs are created. It simplifies the process of managing storage in Kubernetes.

Stateful Applications have unique requirements, as they rely on stable, persistent storage and require ordered and consistent Pod creation. StatefulSets are designed to manage stateful applications in Kubernetes.

Kubernetes shines when deployed in production environments, where high availability, load balancing, monitoring, and scaling are critical.

To achieve high availability, ensure that your Kubernetes cluster has redundant master nodes, worker nodes, and network components. Load balancing distributes incoming traffic across multiple Pods, ensuring efficient resource utilization.

Disaster Recovery plans are essential for maintaining business continuity in case of cluster failures or data loss. Strategies may include data replication, regular backups, and multi-cluster setups.

Monitoring your applications in production is crucial for detecting performance issues and bottlenecks. Auto-scaling automatically adjusts the number of Pods based on demand to handle varying workloads.

Canary Deployments gradually roll out new versions of applications to a subset of users, ensuring the update is stable before full deployment. Blue/Green Deployments switch traffic between two identical environments with different versions, reducing downtime.

Jenkins and Spinnaker are popular tools for automating the deployment process. They can be integrated into your CI/CD pipeline to continuously deliver updates to the cluster.

As Kubernetes continues to evolve, new advanced features and concepts are emerging. Let's explore some of these cutting-edge topics.

Horizontal Pod Autoscaler automatically scales the number of Pods based on resource utilization, while Vertical Pod Autoscaler adjusts container resource requests and limits based on historical utilization.

Custom Resource Definitions (CRDs) extend the Kubernetes API, allowing you to define your custom resources. Custom Controllers can then watch and react to these custom resources, enabling custom application management.

Kubernetes Federation allows you to manage multiple clusters as a single entity, providing a unified view and management experience across clusters.

Multi-Cluster Kubernetes enables you to span your applications across multiple clusters, providing redundancy and geographic distribution for your workloads.

How to Answer: A Pod is the smallest deployable unit in Kubernetes, representing one or more containers that share the same network namespace and storage volumes. Candidates should explain that Pods are used to group containers that need to work together on the same host and communicate via localhost.

Sample Answer: "In Kubernetes, a Pod is the basic building block and represents one or more tightly coupled containers. These containers are scheduled together on the same worker node and share the same network namespace, enabling them to communicate via localhost. Pods are typically used to run containers that need to collaborate and share resources, such as a web server and a log-shipping sidecar for a web application."

What to Look For: Look for candidates who can clearly explain the concept of Pods, their purpose, and the benefits of using them to group related containers.

How to Answer: Deployments in Kubernetes manage the desired state of replicated Pods, allowing easy updates and rollbacks. Candidates should mention that Deployments use ReplicaSets to ensure the specified number of replicas are running. They should also highlight the rolling update strategy for seamless application updates.

Sample Answer: "In Kubernetes, Deployments are higher-level abstractions that ensure the desired state of replicated Pods. They use ReplicaSets to guarantee that a specified number of identical replicas are running at all times. Deployments are mainly used for managing stateless applications and handling rolling updates. The rolling update strategy enables us to update the application with new container images gradually, minimizing downtime and ensuring that the application remains available during the update process."

What to Look For: Seek candidates who can articulate the purpose of Deployments, their relationship with ReplicaSets, and their role in managing rolling updates.

How to Answer: Candidates should explain that a Kubernetes Service is an abstraction that provides a stable IP address and DNS name to access a group of Pods. They should mention the different types of Services (ClusterIP, NodePort, LoadBalancer, and ExternalName) and their use cases.

Sample Answer: "A Kubernetes Service is an abstraction that allows us to access a group of Pods using a stable IP address and DNS name. It enables seamless communication between different parts of our application within the cluster. There are four types of Services: ClusterIP, which is the default type and provides internal cluster-only access; NodePort, which exposes the Service on a static port on each node's IP address; LoadBalancer, which provisions an external load balancer to distribute traffic to the Service; and ExternalName, which maps the Service to an external DNS name."

What to Look For: Look for candidates who can explain the purpose of Kubernetes Service, its different types, and how it facilitates communication between Pods.

How to Answer: Candidates should mention that network policies in Kubernetes control the flow of traffic between Pods. They should explain that network policies use labels to match Pods and define rules for allowing or denying communication.

Sample Answer: "Network policies in Kubernetes enable us to define rules for controlling the flow of traffic between Pods. They use labels to select the Pods to which the policy applies. Network policies can allow or deny traffic based on source and destination Pod labels, protocols, and port numbers. By creating and applying network policies, we can enforce communication rules between different components of our application, enhancing security and isolating Pods as needed."

What to Look For: Seek candidates who can explain the concept of network policies, how they work, and their significance in securing and managing communication between Pods.

How to Answer: Candidates should describe Persistent Volumes as cluster-wide storage provisioned by administrators, while Persistent Volume Claims are requests for storage resources by Pods. They should mention that PVCs bind to PVs to provide data persistence for applications.

Sample Answer: "In Kubernetes, Persistent Volumes (PVs) are cluster-wide storage volumes provisioned by administrators. They are independent of Pods and can exist beyond the lifecycle of a Pod. On the other hand, Persistent Volume Claims (PVCs) are requests made by Pods for specific storage resources. When a PVC is created, Kubernetes binds it to an available PV that matches the PVC's requirements. This binding ensures that the Pod has access to the requested storage and enables data persistence for applications."

What to Look For: Look for candidates who can clearly differentiate between PVs and PVCs, their purpose, and how they work together to provide persistent storage in Kubernetes.

How to Answer: Candidates should explain that dynamic provisioning allows PVs to be automatically created and bound to PVCs when requested. They should mention that Storage Classes are used to define the dynamic provisioning behavior.

Sample Answer: "Dynamic provisioning in Kubernetes enables automatic creation and binding of Persistent Volumes to Persistent Volume Claims. When a PVC is created, Kubernetes uses Storage Classes to dynamically provision the required storage resources. Storage Classes define the storage parameters, such as the provisioner, reclaim policy, and volume binding mode. When a PVC with a specific Storage Class is requested, Kubernetes automatically creates and binds a corresponding PV that matches the requirements, ensuring seamless and efficient provisioning of storage resources."

What to Look For: Seek candidates who understand the concept of dynamic provisioning, how Storage Classes are used, and the benefits of automating the creation of PVs based on PVC requests.

How to Answer: Candidates should mention that Kubernetes supports both horizontal and vertical scaling. They should explain that Horizontal Pod Autoscaler (HPA) automatically scales the number of Pods based on CPU utilization or custom metrics, while Vertical Pod Autoscaler (VPA) adjusts container resource requests and limits.

Sample Answer: "Kubernetes supports two types of scaling: horizontal and vertical. Horizontal Pod Autoscaler (HPA) automatically scales the number of Pods based on CPU utilization or custom metrics. When the resource utilization exceeds the defined threshold, HPA increases the number of replicas to handle the increased workload. On the other hand, Vertical Pod Autoscaler (VPA) adjusts the resource requests and limits of containers based on historical utilization. It ensures that containers have adequate resources to perform optimally without over- or under-provisioning."

What to Look For: Look for candidates who can explain the different scaling approaches supported by Kubernetes, their use cases, and the benefits they bring to managing application workloads.

How to Answer: Candidates should explain that rolling updates allow the seamless update of an application by gradually replacing old Pods with new ones. They should mention that Kubernetes automatically handles the process. For rollbacks, candidates should explain that Kubernetes allows you to revert to the previous version of a Deployment.

Sample Answer: "In Kubernetes, rolling updates are a strategy to update an application with new container images seamlessly. The update process is automated by Kubernetes, which gradually replaces old Pods with new ones. This approach ensures that the application remains available during the update, reducing downtime and the risk of service disruptions. For rollbacks, Kubernetes allows us to revert to the previous version of a Deployment if issues arise during the update, providing a safety net to maintain application stability."

What to Look For: Seek candidates who can explain the concept of rolling updates, how they are performed in Kubernetes, and the importance of having a rollback strategy for managing application updates.

How to Answer: Candidates should explain that RBAC in Kubernetes allows fine-grained control over user access and permissions. They should mention that RBAC is defined through Roles and RoleBindings or ClusterRoles and ClusterRoleBindings.

Sample Answer: "Role-Based Access Control (RBAC) in Kubernetes enables us to control user access and permissions with granularity. RBAC is defined using Roles and RoleBindings for specific namespaces or ClusterRoles and ClusterRoleBindings for the entire cluster. Roles define a set of rules for accessing specific resources, while RoleBindings associate these rules with users, groups, or service accounts. ClusterRoles and ClusterRoleBindings function similarly but apply cluster-wide. Implementing RBAC ensures that users have the appropriate level of access to perform their tasks and strengthens the security of the Kubernetes cluster."

What to Look For: Look for candidates who can explain the purpose of RBAC, how it is implemented in Kubernetes, and the significance of implementing RBAC best practices for security.

How to Answer: Candidates should mention that Kubernetes Secrets are used to securely store sensitive data, such as passwords and API keys. They should explain that ConfigMaps are used to decouple configuration data from application code.

Sample Answer: "In Kubernetes, we manage sensitive data and configurations using Secrets and ConfigMaps. Secrets allow us to store sensitive information, such as database passwords or API keys, separately from application code and images. Their values are Base64-encoded, which is an encoding rather than encryption, so we enable encryption at rest and restrict access with RBAC to ensure data confidentiality. On the other hand, ConfigMaps enable us to decouple configuration data from application code, simplifying management and allowing us to make configuration changes without modifying the container image. By using Secrets and ConfigMaps, we can ensure that sensitive data remains protected and that configurations are easily manageable."

What to Look For: Seek candidates who can explain the purpose of Secrets and ConfigMaps, how they are used, and the best practices for securely managing sensitive data and configurations.

How to Answer: Candidates should explain that securing container images involves following best practices during image creation and deployment. They should mention the importance of using trusted base images, regularly updating images, and scanning for vulnerabilities.

Sample Answer: "Securing container images is a critical aspect of Kubernetes security. To ensure image security, it's essential to start with trusted base images from reputable sources. Regularly updating container images to the latest versions is vital, as it includes security patches and bug fixes. Utilizing container image scanning tools can help identify vulnerabilities and ensure that images do not contain known security issues. Additionally, implementing image signing and verification mechanisms adds an extra layer of security to the container image supply chain. By adhering to these best practices, we can enhance the security of container images used in Kubernetes."

What to Look For: Seek candidates who can explain the significance of securing container images, the best practices involved, and how to maintain a secure image supply chain for Kubernetes deployments. Look for awareness of image scanning and verification mechanisms as part of the security process.

How to Answer: Candidates should describe CRDs as extensions of the Kubernetes API, enabling the definition of custom resources and behaviors. They should mention that Operators are Kubernetes controllers that automate tasks based on CRDs.

Sample Answer: "Custom Resource Definitions (CRDs) in Kubernetes allow us to extend the Kubernetes API and define our custom resources and their behaviors. With CRDs, we can create and manage our application-specific resources in the same way as built-in Kubernetes resources. Operators are Kubernetes controllers that leverage CRDs to automate complex application management tasks. By combining CRDs and Operators, we can achieve automation and consistency in managing our custom resources, making Kubernetes even more powerful and extensible."

What to Look For: Look for candidates who can explain the concept of CRDs and their relationship with Operators, showcasing an understanding of how CRDs enable custom resource management in Kubernetes.

How to Answer: Candidates should explain that high availability in Kubernetes is achieved by having redundant master nodes, worker nodes, and network components. They should mention that this redundancy ensures continuous service even in the face of failures.

Sample Answer: "To ensure high availability in Kubernetes clusters, we need to design for redundancy at multiple levels. Having redundant master nodes ensures that the control plane remains operational even if some masters fail. Similarly, having multiple worker nodes distributes the workload and provides resilience against node failures. Additionally, using a load balancer for external access to Services ensures that traffic is evenly distributed and no single node becomes a single point of failure. By implementing these redundancy measures, we can achieve high availability and maintain continuous service even in the face of failures."

What to Look For: Seek candidates who can articulate the importance of high availability in Kubernetes, the redundancy measures required at different levels, and the impact of ensuring continuous service.

How to Answer: Candidates should mention common monitoring tools for Kubernetes, such as Metrics Server and Prometheus. They should highlight the importance of monitoring resource utilization, performance metrics, and application health.

Sample Answer: "Monitoring Kubernetes clusters and applications is critical to ensuring their health and performance. Common monitoring tools include Metrics Server and Prometheus. Metrics Server collects resource utilization data from nodes and Pods, enabling us to monitor CPU and memory usage. Prometheus, a separate open-source project, is a more powerful monitoring tool that stores time-series data, allowing us to query and visualize a wide range of performance metrics. By monitoring resource usage, performance, and application health, we can proactively detect and address potential issues to maintain a reliable and performant Kubernetes environment."

What to Look For: Look for candidates who can explain the significance of monitoring Kubernetes clusters and applications, the monitoring tools available in Kubernetes, and the key metrics to monitor for performance and health.

How to Answer: Candidates should mention that application and data backups in Kubernetes can be achieved through various methods, such as Volume Snapshots and etcd backups. They should highlight the importance of regular backups to protect against data loss.

Sample Answer: "In Kubernetes, handling application and data backups is crucial to protect against data loss and ensure business continuity. For persistent data, we can use Volume Snapshots to create point-in-time copies of Persistent Volumes. These snapshots can then be stored in an external storage system for safekeeping. Additionally, backing up the etcd data store is essential for disaster recovery. Regularly backing up the etcd database ensures that we can restore the entire cluster's state if necessary. By implementing these backup strategies, we can safeguard our applications and data in Kubernetes."

What to Look For: Seek candidates who can explain the importance of application and data backups in Kubernetes, the methods available for backups, and the significance of regular backups for disaster recovery.

As with any technology, Kubernetes may present challenges and issues that need to be addressed. Here are some common challenges and troubleshooting techniques:

When applications misbehave, debugging can be challenging in a distributed environment. Techniques like kubectl logs, kubectl exec, and application-specific logs can be invaluable for troubleshooting.

Optimizing performance requires monitoring and identifying resource bottlenecks. Tools like Prometheus and Grafana can help you identify performance issues and bottlenecks.

Upgrading Kubernetes clusters or applications requires careful planning and testing. Similarly, rollbacks need to be performed with caution to avoid potential data loss or downtime.

Misconfigured networking or DNS can lead to communication failures between Pods and Services. Proper network policies and troubleshooting techniques are vital for resolving such issues.

Managing Persistent Volumes and Persistent Volume Claims can be complex. Understanding storage classes, dynamic provisioning, and troubleshooting storage-related problems is essential for maintaining data integrity.

Kubernetes is an ever-evolving technology, and its future is exciting. Here's what you can look forward to:

The Kubernetes community continues to grow, contributing to an extensive ecosystem of tools, extensions, and solutions.

Expect more advanced features and enhancements, such as improved scalability, better support for stateful applications, and simplified management of complex clusters.

Serverless computing and Kubernetes are becoming more intertwined, offering a scalable, event-driven approach to running applications in containers.

Kubernetes is also making its way into edge computing scenarios, enabling efficient management and deployment of applications at the edge of the network.

This guide has covered the top Kubernetes interview questions, providing valuable insights into the fundamental concepts, networking, storage, management, scaling, security, and advanced topics related to Kubernetes. As a candidate preparing for Kubernetes interviews, you now have a solid understanding of the key areas that interviewers may explore during the selection process.

Throughout this guide, we have offered guidance on how to effectively answer each question, providing tips, strategies, and best practices to help you showcase your knowledge and expertise. The sample answers provided serve as reference points, offering inspiration on how to structure your responses and demonstrate the desired qualities and competencies sought by hiring managers.

To stand out during Kubernetes interviews, combine technical expertise with a practical understanding of Kubernetes and its best practices. That combination lets you confidently navigate Kubernetes interviews and impress potential employers with your ability to manage containerized applications effectively.

Remember, successful Kubernetes professionals continue to learn, explore, and gain hands-on experience in real-world scenarios. Stay curious, keep experimenting with Kubernetes clusters, and actively engage with the vibrant Kubernetes community to expand your knowledge.