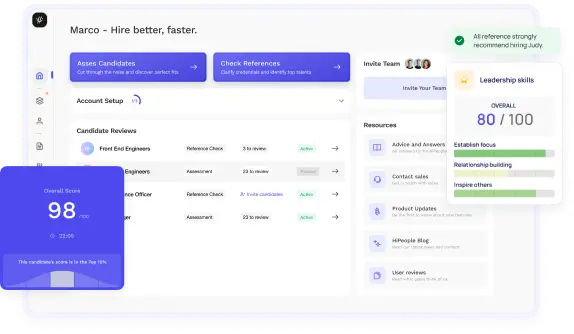

Streamline hiring with effortless screening tools

Optimise your hiring process with HiPeople's AI assessments and reference checks.

Are you ready to ace your Kafka interview and showcase your expertise in real-time data processing? Whether you're an aspiring candidate eager to demonstrate your Kafka knowledge or an employer seeking top talent in the field, mastering Kafka interview questions is essential. In this guide, we'll delve into the top Kafka interview questions, covering everything from Kafka's architecture and core concepts to advanced topics like security, scalability, and integration with other technologies. By exploring these questions in detail, you'll gain a deeper understanding of Kafka and equip yourself with the knowledge and confidence needed to excel in Kafka interviews. So, let's dive in and unlock the insights you need to succeed in the world of Kafka.

Apache Kafka is a distributed event streaming platform designed for building real-time data pipelines and streaming applications. It was originally developed at LinkedIn, open-sourced in 2011, and is now maintained as an Apache Software Foundation project. Kafka provides a highly scalable, fault-tolerant, and durable messaging system that enables high-throughput, low-latency data processing at scale. Its architecture is based on a distributed commit log: each topic is split into partitions, and each partition is an append-only log replicated across multiple brokers. Kafka's pub-sub model allows producers to publish data to topics, and consumers to subscribe to topics and process data in real time.

How to Answer: Candidates should provide a concise definition of Apache Kafka, highlighting its key features such as its distributed nature, fault tolerance, and high throughput. They should contrast Kafka with traditional messaging systems like RabbitMQ or ActiveMQ, emphasizing Kafka's durable, replayable log and publish-subscribe architecture.

Sample Answer: "Apache Kafka is a distributed streaming platform designed for building real-time streaming data pipelines and applications. Unlike many traditional message brokers, which typically remove a message once it has been consumed, Kafka retains messages in a replicated on-disk log for a configurable retention period, so consumers can re-read them. It uses a publish-subscribe model and is highly scalable, making it ideal for handling large volumes of data."

What to Look For: Look for candidates who demonstrate a clear understanding of Kafka's core concepts and can effectively differentiate it from traditional messaging systems. Strong candidates will articulate the advantages of Kafka's architecture and its suitability for streaming data applications.

How to Answer: Candidates should identify and describe the main components of Kafka, including producers, consumers, brokers, topics, partitions, and consumer groups. They should explain how these components interact to facilitate message processing and storage in Kafka clusters.

Sample Answer: "The key components of Kafka architecture include producers, which publish messages to topics, and consumers, which subscribe to these topics to consume messages. Brokers are Kafka servers responsible for message storage and replication. Topics are logical channels for organizing messages, divided into partitions for scalability. Consumer groups consist of consumers that share the load of processing messages within a topic."

What to Look For: Evaluate candidates based on their ability to articulate the roles and interactions of Kafka's core components. Strong answers will demonstrate a clear understanding of how producers, consumers, brokers, topics, partitions, and consumer groups contribute to Kafka's distributed messaging system.
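To make the role of partitions more concrete, here is a minimal sketch of how a record key can be mapped to a partition so that all records with the same key land in the same partition. It is a simplified model, not Kafka's code: Kafka's built-in partitioner hashes the serialized key with murmur2, while this sketch substitutes `Arrays.hashCode` purely for illustration. The class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Simplified model of key-based partition selection.
// Assumption: Arrays.hashCode stands in for the murmur2 hash Kafka uses.
final class PartitionSketch {
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        // Mask the sign bit so the modulo result is never negative.
        return (Arrays.hashCode(keyBytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int partitions = 6;
        // "order-1" appears twice and is mapped to the same partition both times.
        for (String key : new String[] {"order-1", "order-2", "order-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
    }
}
```

Because the mapping depends only on the key and the partition count, ordering is preserved per key, but changing the number of partitions changes where keys land.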

How to Answer: Candidates should discuss various scenarios where Kafka is commonly used, such as real-time stream processing, log aggregation, event sourcing, and data integration. They should provide examples of industries or applications that benefit from Kafka's capabilities.

Sample Answer: "Apache Kafka is used in a wide range of use cases, including real-time stream processing for analyzing data as it arrives, log aggregation for centralized logging in distributed systems, event sourcing for maintaining immutable event logs, and data integration for connecting disparate systems and applications."

What to Look For: Seek candidates who can effectively articulate the diverse use cases for Kafka across different industries and domains. Look for specific examples and insights into how Kafka addresses the challenges of real-time data processing and integration.

How to Answer: Candidates should describe Kafka's mechanisms for fault tolerance and high availability, such as data replication, leader election, and distributed commit logs. They should explain how Kafka handles node failures and ensures data durability.

Sample Answer: "Kafka achieves fault tolerance and high availability through data replication, where each partition is replicated across multiple brokers within a cluster. It uses a leader-follower replication model and a leader-election mechanism to ensure continuous operation even in the event of broker failures. Durability comes from the replicated commit log: each replica appends messages to its own on-disk log, and with acks=all the producer receives an acknowledgment only after all in-sync replicas have the write."

What to Look For: Evaluate candidates based on their understanding of Kafka's fault tolerance mechanisms and their ability to explain how Kafka maintains data consistency and availability under various failure scenarios. Look for insights into Kafka's replication strategies and leader election process.

How to Answer: Candidates should discuss performance optimization techniques such as batch processing, partitioning, message compression, and tuning Kafka configurations like batch size, linger time, and buffer size. They should explain how these strategies improve Kafka's throughput and latency.

Sample Answer: "To optimize Kafka performance for high throughput, you can employ batch processing to reduce overhead and improve efficiency. Partitioning allows you to distribute load across multiple brokers, while message compression reduces network bandwidth usage. Tuning Kafka configurations, such as increasing batch size and adjusting linger time, can also enhance throughput by minimizing I/O operations and maximizing resource utilization."

What to Look For: Look for candidates who demonstrate a deep understanding of Kafka's performance characteristics and can propose effective optimization strategies. Strong answers will include specific techniques and configuration adjustments tailored to improving Kafka's throughput and latency.
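As a rough illustration of the tuning knobs mentioned above, the following sketch builds a producer configuration that favors throughput. The keys (`batch.size`, `linger.ms`, `compression.type`, `buffer.memory`) are standard producer settings, but the values are illustrative assumptions rather than recommendations, and the class and method names are hypothetical. It uses only `java.util.Properties`, so it runs without a Kafka dependency; the resulting object could be passed to a `KafkaProducer` together with serializer settings.

```java
import java.util.Properties;

// Sketch of throughput-oriented producer settings.
// Assumption: the numeric values are examples only and should be tuned per workload.
final class ThroughputConfigSketch {
    static Properties throughputProps(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("batch.size", "65536");        // max bytes per partition batch
        props.put("linger.ms", "20");            // wait up to 20 ms to fill a batch
        props.put("compression.type", "lz4");    // compress whole batches
        props.put("buffer.memory", "67108864");  // total bytes for unsent records
        return props;
    }

    public static void main(String[] args) {
        throughputProps("kafka1:9092,kafka2:9092")
                .forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

Larger batches and a non-zero linger time trade a little latency for fewer, larger requests, which is usually the right trade when throughput matters more than per-message delay.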

How to Answer: Candidates should explain that Kafka consumers pull data from brokers, which lets them control the rate of message consumption, and how consumer group rebalancing and consumer offsets fit in. They should discuss strategies for preventing consumer overload, such as implementing throttling and setting appropriate consumer configurations.

Sample Answer: "Kafka consumers pull records from brokers rather than having records pushed to them, so each consumer controls its own consumption rate by deciding how often it polls and how many records it fetches. Committed offsets record how far each consumer has progressed, so a slow consumer simply falls behind instead of being flooded. Consumer group rebalancing distributes partitions among the consumers in a group, spreading the load. Additionally, implementing throttling and adjusting consumer configurations, such as max.poll.records and max.poll.interval.ms, helps keep each batch small enough to process in time and limits consumer lag."

What to Look For: Evaluate candidates based on their understanding of Kafka's mechanisms for managing backpressure and consumer overload. Look for insights into how Kafka distributes message processing across consumers and mitigates the risk of consumer lag through effective configuration management.
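The consumer settings named above can be sketched as a plain configuration object. The keys are standard consumer settings; the values, the group name, and the class and method names are illustrative assumptions. The sketch depends only on `java.util.Properties`.

```java
import java.util.Properties;

// Sketch of consumer settings that bound how much work each poll() hands to the application.
// Assumption: values are examples, not recommendations.
final class ConsumerPacingSketch {
    static Properties pacedConsumerProps(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("group.id", groupId);
        props.put("max.poll.records", "100");        // at most 100 records per poll()
        props.put("max.poll.interval.ms", "300000"); // max gap between polls before a rebalance
        props.put("fetch.max.bytes", "10485760");    // cap on data returned per fetch
        props.put("enable.auto.commit", "false");    // commit only after processing succeeds
        return props;
    }

    public static void main(String[] args) {
        pacedConsumerProps("kafka1:9092", "my-group")
                .forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

For finer control at runtime, `KafkaConsumer` also offers `pause()` and `resume()` for individual partitions, which lets an application stop fetching while a downstream system catches up without leaving the group.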

How to Answer: Candidates should discuss monitoring tools and frameworks such as Kafka Manager, Confluent Control Center, Prometheus, and Grafana. They should explain how these tools provide visibility into Kafka cluster health, performance metrics, and operational insights.

Sample Answer: "There are several tools available for monitoring Kafka clusters, including Kafka Manager, which offers cluster management and monitoring capabilities, and Confluent Control Center, which provides centralized monitoring and management for Kafka environments. Additionally, you can use monitoring solutions like Prometheus and Grafana to collect and visualize Kafka metrics, enabling proactive performance monitoring and troubleshooting."

What to Look For: Look for candidates who are familiar with monitoring tools and practices for Kafka and can discuss how these tools contribute to effective cluster management. Strong answers will highlight the importance of real-time monitoring and actionable insights for ensuring Kafka's reliability and performance.

How to Answer: Candidates should explain techniques for ensuring data consistency and integrity in Kafka, such as idempotent producers, transactional messaging, and data retention policies. They should discuss how these mechanisms help maintain data quality and reliability in Kafka clusters.

Sample Answer: "To ensure data consistency and integrity in Kafka, you can use idempotent producers, which ensure that producer retries do not write duplicate messages to a partition. Kafka also supports transactions, allowing producers to atomically write messages across multiple partitions; combined with consumers that read only committed data, this enables exactly-once processing in read-process-write pipelines. By defining appropriate data retention policies and replication factors, you can further enhance data durability and reliability in Kafka clusters."

What to Look For: Evaluate candidates based on their understanding of data consistency and integrity mechanisms in Kafka and their ability to propose effective strategies for maintaining data quality. Look for insights into Kafka's support for idempotent producers, transactional messaging, and data retention policies.
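The idea behind idempotent producers can be illustrated with a small model of a single partition that tracks the last sequence number seen from each producer and ignores retried writes. This is a deliberately simplified sketch of the concept, not Kafka's broker code; the class, its methods, and the in-memory log are all hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified model of duplicate detection for one partition.
// Assumption: one sequence number per record; real brokers track sequences per batch.
final class IdempotenceSketch {
    private final Map<Long, Integer> lastSequence = new HashMap<>(); // producerId -> last accepted sequence
    private final List<String> log = new ArrayList<>();

    /** Returns true if the record was appended, false if it was recognized as a retry. */
    boolean append(long producerId, int sequence, String value) {
        int last = lastSequence.getOrDefault(producerId, -1);
        if (sequence <= last) {
            return false; // already written: acknowledge without appending again
        }
        if (sequence != last + 1) {
            throw new IllegalStateException("out-of-order sequence " + sequence);
        }
        log.add(value);
        lastSequence.put(producerId, sequence);
        return true;
    }

    List<String> log() {
        return log;
    }

    public static void main(String[] args) {
        IdempotenceSketch partition = new IdempotenceSketch();
        partition.append(1L, 0, "a");
        partition.append(1L, 0, "a"); // retry of the same record is ignored
        partition.append(1L, 1, "b");
        System.out.println(partition.log()); // prints [a, b]
    }
}
```

In a real producer this behavior is switched on with the `enable.idempotence` setting; the application does not manage sequence numbers itself.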

How to Answer: Candidates should discuss Kafka's integration capabilities with popular systems and frameworks such as Apache Spark, Apache Flink, and Apache Storm for stream processing, as well as connectors for databases like Apache Cassandra and Elasticsearch. They should explain how Kafka's ecosystem facilitates seamless data integration and interoperability.

Sample Answer: "Kafka integrates with various systems and frameworks through its ecosystem of connectors and APIs. For stream processing, Kafka can be seamlessly integrated with Apache Spark, Apache Flink, and Apache Storm, enabling real-time data analysis and processing. Kafka Connect provides connectors for integrating with databases like Apache Cassandra and Elasticsearch, allowing bidirectional data movement between Kafka and other data stores."

What to Look For: Look for candidates who demonstrate knowledge of Kafka's integration capabilities and its ecosystem of connectors and APIs. Strong answers will highlight specific use cases and examples of Kafka's interoperability with different systems and frameworks for data integration and processing.

How to Answer: Candidates should discuss security features and best practices for securing Kafka deployments, such as SSL/TLS encryption, SASL authentication (for example Kerberos or SCRAM), and access control lists (ACLs). They should explain how these measures protect data confidentiality and prevent unauthorized access to Kafka clusters.

Sample Answer: "To ensure security in Kafka deployments, you can enable SSL/TLS encryption to encrypt data in transit between clients and brokers, protecting it from interception or tampering. Kafka supports authentication through SASL, with mechanisms such as GSSAPI (Kerberos), SCRAM, and PLAIN, as well as mutual TLS, for verifying client identities. Additionally, you can use access control lists (ACLs) to define fine-grained permissions for topics and operations, ensuring that only authorized users and applications can interact with Kafka."

What to Look For: Evaluate candidates based on their understanding of Kafka's security features and their ability to propose comprehensive security measures for Kafka deployments. Look for insights into encryption, authentication, and access control mechanisms, as well as considerations for securing data both in transit and at rest within Kafka clusters.
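A client-side view of these settings might look like the sketch below, which assembles properties for a TLS-encrypted connection with SASL/SCRAM authentication. The property keys and the `ScramLoginModule` class name are standard Kafka client settings; the user name, password, truststore path, and the class and method names are placeholders assumed for illustration. In practice, secrets would come from a secure store rather than method arguments.

```java
import java.util.Properties;

// Sketch of client security settings: TLS for encryption, SASL/SCRAM for authentication.
// Assumption: all credentials and paths passed in are placeholders.
final class SecureClientConfigSketch {
    static Properties secureProps(String user, String password,
                                  String truststorePath, String truststorePassword) {
        Properties props = new Properties();
        props.put("security.protocol", "SASL_SSL");
        props.put("sasl.mechanism", "SCRAM-SHA-512");
        props.put("sasl.jaas.config",
                "org.apache.kafka.common.security.scram.ScramLoginModule required "
                        + "username=\"" + user + "\" password=\"" + password + "\";");
        props.put("ssl.truststore.location", truststorePath);
        props.put("ssl.truststore.password", truststorePassword);
        return props;
    }

    public static void main(String[] args) {
        Properties props = secureProps("app-user", "change-me",
                "/path/to/truststore.jks", "change-me-too");
        // Print only non-secret settings.
        System.out.println(props.getProperty("security.protocol"));
        System.out.println(props.getProperty("sasl.mechanism"));
    }
}
```

Authorization through ACLs is configured on the broker side and is not shown here.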

How to Answer: Candidates should explain the role of serializers and deserializers in Kafka for converting data between application objects and the byte arrays that Kafka stores and transmits. They should discuss common serialization formats like Avro, JSON, and Protobuf, and their implications on performance and compatibility.

Sample Answer: "Kafka brokers store and transmit keys and values as byte arrays. Serializers and deserializers (SerDes) on the client side convert application objects to bytes and back. Kafka ships with serializers for basic types such as strings and byte arrays, while formats such as Avro, JSON, and Protobuf are commonly used for structured data, each offering different trade-offs in terms of schema evolution, performance, and compatibility with different programming languages and frameworks."

What to Look For: Look for candidates who demonstrate an understanding of data serialization concepts and their significance in Kafka's message processing pipeline. Strong answers will discuss the trade-offs between serialization formats and their impact on data compatibility, schema evolution, and performance.

How to Answer: Candidates should describe techniques for customizing data serialization in Kafka, such as implementing custom serializers and deserializers, using schema registries, and defining serialization configurations in producer and consumer applications.

Sample Answer: "To customize data serialization in Kafka, you can implement custom serializers and deserializers tailored to your data formats and requirements. Additionally, you can use schema registries like Confluent Schema Registry to manage schema evolution and compatibility for serialized data. Configuring serialization properties in producer and consumer applications allows you to specify serialization formats, encoding options, and schema registry endpoints."

What to Look For: Evaluate candidates based on their familiarity with techniques for customizing data serialization in Kafka and their ability to explain how these techniques support data interoperability, schema evolution, and performance optimization. Look for practical examples and insights into implementing custom serializers and deserializers.
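The core of a custom serializer is a pair of functions that turn an object into bytes and back. The sketch below shows such a pair for a hypothetical `UserEvent` type using a simple length-prefixed binary layout. To keep the example free of dependencies it does not implement Kafka's interfaces; in a real application the two method bodies would sit inside classes implementing `Serializer<T>` and `Deserializer<T>` from `org.apache.kafka.common.serialization`. The event type and layout are assumptions made for illustration.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Sketch of custom encode/decode logic for a hypothetical event type.
// Layout (assumed): 8-byte user id, 4-byte action length, UTF-8 action bytes.
final class UserEventCodecSketch {
    static final class UserEvent {
        final long userId;
        final String action;

        UserEvent(long userId, String action) {
            this.userId = userId;
            this.action = action;
        }
    }

    static byte[] serialize(UserEvent event) {
        byte[] action = event.action.getBytes(StandardCharsets.UTF_8);
        ByteBuffer buffer = ByteBuffer.allocate(Long.BYTES + Integer.BYTES + action.length);
        buffer.putLong(event.userId).putInt(action.length).put(action);
        return buffer.array();
    }

    static UserEvent deserialize(byte[] data) {
        ByteBuffer buffer = ByteBuffer.wrap(data);
        long userId = buffer.getLong();
        byte[] action = new byte[buffer.getInt()];
        buffer.get(action);
        return new UserEvent(userId, new String(action, StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        UserEvent decoded = deserialize(serialize(new UserEvent(42L, "login")));
        System.out.println(decoded.userId + " " + decoded.action); // prints 42 login
    }
}
```

A hand-rolled layout like this has no built-in schema evolution, which is the main reason teams often prefer Avro or Protobuf with a schema registry.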

How to Answer: Candidates should explain Kafka's replication protocol and mechanisms for maintaining data consistency across replicas, such as leader-follower replication, ISR (In-Sync Replica) sets, and follower synchronization. They should discuss how Kafka handles replica failures and ensures consistency during leader elections.

Sample Answer: "Kafka ensures data consistency across replicated partitions through leader-follower replication, where each partition has one leader and one or more followers. Followers continuously fetch new records from the leader. The ISR (In-Sync Replica) set consists of the replicas that are caught up with the leader; a record counts as committed once every in-sync replica has it, and consumers only see committed records. If the leader fails, a new leader is by default chosen from the ISR, so committed data is preserved across replica failures."

What to Look For: Look for candidates who demonstrate an understanding of Kafka's replication protocol and its role in ensuring data consistency and durability. Strong answers will discuss mechanisms for handling replica synchronization, leader elections, and fault tolerance in Kafka clusters.
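One way to picture how the in-sync replica set bounds what counts as committed is the high watermark: the highest offset that every in-sync replica has reached. The following sketch computes it for one partition from each replica's log end offset. It is a conceptual model with hypothetical names and made-up offsets, not broker code.

```java
import java.util.Map;
import java.util.Set;

// Conceptual model: the high watermark is the minimum log end offset across the ISR.
// Assumption: broker ids and offsets are illustrative values.
final class HighWatermarkSketch {
    static long highWatermark(Map<Integer, Long> logEndOffsets, Set<Integer> inSyncReplicas) {
        if (inSyncReplicas.isEmpty()) {
            throw new IllegalArgumentException("ISR must contain at least the leader");
        }
        long highWatermark = Long.MAX_VALUE;
        for (int brokerId : inSyncReplicas) {
            highWatermark = Math.min(highWatermark, logEndOffsets.get(brokerId));
        }
        return highWatermark;
    }

    public static void main(String[] args) {
        // Broker 3 has fallen behind and dropped out of the ISR, so it does not hold back commits.
        Map<Integer, Long> logEndOffsets = Map.of(1, 100L, 2, 95L, 3, 80L);
        System.out.println(highWatermark(logEndOffsets, Set.of(1, 2))); // prints 95
    }
}
```

The example also shows why a slow follower is removed from the ISR: keeping it in the set would hold the high watermark at its offset and delay every acknowledgment.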

How to Answer: Candidates should discuss disaster recovery strategies for Kafka deployments, such as multi-datacenter replication, data mirroring, and regular backups. They should explain how these strategies mitigate the risk of data loss and ensure business continuity in the event of failures or disasters.

Sample Answer: "For disaster recovery in Kafka, you can implement multi-datacenter replication to replicate data across geographically distributed clusters, reducing the impact of localized failures or disasters. Data mirroring allows you to mirror data between clusters in real-time, providing redundancy and failover capabilities. Additionally, regular backups of Kafka data and configuration files enable quick restoration of services in the event of catastrophic failures or data corruption."

What to Look For: Evaluate candidates based on their understanding of disaster recovery principles and their ability to propose effective strategies for ensuring data resilience and business continuity in Kafka deployments. Look for insights into multi-datacenter replication, data mirroring, and backup and restore procedures.

How to Answer: Candidates should explain Kafka Streams, a client library for building stream processing applications with Kafka, and its key features such as stateful processing, windowing, and fault tolerance. They should discuss how Kafka Streams integrates with Kafka and simplifies the development of real-time data processing pipelines.

Sample Answer: "Kafka Streams is a client library for building stream processing applications directly against Kafka. It provides abstractions for stateful processing, windowing, and event-time processing, allowing developers to implement complex data processing logic with ease. Kafka Streams integrates seamlessly with Kafka clusters and leverages Kafka's fault tolerance mechanisms for high availability and data durability."

What to Look For: Look for candidates who demonstrate familiarity with Kafka Streams and its capabilities for building stream processing applications. Strong answers will highlight the advantages of using Kafka Streams for real-time data processing, including its integration with Kafka and support for fault tolerance and state management.
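Windowing is often the least intuitive of these features, so here is a plain-Java sketch of the idea behind fixed-size, non-overlapping (tumbling) windows: each event timestamp is mapped to the start of the window that contains it, and events are counted per window. This models the concept only and does not use the Kafka Streams API; the class and method names and the sample timestamps are assumptions.

```java
import java.util.Map;
import java.util.TreeMap;

// Conceptual model of tumbling-window counting keyed by window start time.
// Assumption: timestamps are non-negative event times in milliseconds.
final class TumblingWindowSketch {
    static long windowStart(long timestampMs, long windowSizeMs) {
        // Align the timestamp down to the nearest multiple of the window size.
        return timestampMs - (timestampMs % windowSizeMs);
    }

    static Map<Long, Integer> countPerWindow(long[] timestampsMs, long windowSizeMs) {
        Map<Long, Integer> counts = new TreeMap<>();
        for (long timestamp : timestampsMs) {
            counts.merge(windowStart(timestamp, windowSizeMs), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        long[] eventTimes = {1000, 4000, 5000, 9999, 10000};
        System.out.println(countPerWindow(eventTimes, 5000)); // prints {0=2, 5000=2, 10000=1}
    }
}
```

In Kafka Streams the per-window counts would live in a fault-tolerant state store rather than an in-memory map, which is what makes the processing stateful and recoverable.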


Apache Kafka is a distributed streaming platform designed to handle real-time data feeds with high throughput and fault tolerance. Understanding the basics of Kafka is essential for both employers evaluating candidates' skills and candidates preparing for Kafka interviews.

Kafka's architecture is distributed and follows a client-server model. It comprises several key components working together to ensure data reliability and scalability:

Kafka follows a publish-subscribe (pub-sub) messaging model, where producers publish data records to topics, and consumers subscribe to topics to receive and process those records. This decoupled architecture enables asynchronous communication between producers and consumers, allowing for real-time data processing and analysis.

Kafka plays a pivotal role in enabling real-time data streaming and processing for various use cases, including:

By providing a scalable, fault-tolerant, and high-throughput platform for data streaming and processing, Kafka has become a foundational component in modern data architectures. Understanding Kafka's role in real-time data processing is crucial for both employers seeking to leverage Kafka for their data infrastructure and candidates aiming to demonstrate their proficiency in Kafka during interviews.

Setting up and configuring Kafka clusters is a critical aspect of leveraging Kafka effectively for real-time data processing. We'll take you through the steps required to install Kafka on different platforms, optimize Kafka clusters for performance, understand Kafka configurations and properties, and implement best practices for deployment and maintenance.

Installing Kafka on various platforms, such as Linux, Windows, or macOS, involves similar procedures but may require platform-specific configurations. Here's a general overview of the steps:

Set KAFKA_HOME and update the system PATH to include Kafka binaries. Edit server.properties and zookeeper.properties to suit your requirements.

Optimizing Kafka clusters for performance involves considering various factors related to hardware, network, and configuration settings:

Kafka provides extensive configuration options to fine-tune its behavior and performance. Understanding these configurations is essential for optimizing Kafka clusters and addressing specific use case requirements:

Deploying and maintaining Kafka clusters require adherence to best practices to ensure reliability, scalability, and ease of management:

By following these guidelines and best practices, you can set up, configure, and maintain Kafka clusters effectively to meet your organization's real-time data processing needs while ensuring reliability, scalability, and performance.

Kafka producers play a crucial role in publishing data to Kafka topics, facilitating the flow of real-time information within Kafka clusters. Let's explore common use cases for Kafka producers, the process of configuring and writing data to Kafka topics, and strategies for error handling and message delivery guarantees.

Kafka producers are versatile components that find applications across various domains and industries. Some common use cases for Kafka producers include:

Configuring Kafka producers involves specifying various parameters to control their behavior, such as the bootstrap servers, message serialization format, and message delivery semantics. Here's an overview of the steps involved in configuring and writing data to Kafka topics:

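```java
import java.util.Properties;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class ProducerExample {
    public static void main(String[] args) {
        // Connection and serialization settings
        Properties props = new Properties();
        props.put("bootstrap.servers", "kafka1:9092,kafka2:9092");
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

        // try-with-resources closes the producer, which flushes pending sends
        try (Producer<String, String> producer = new KafkaProducer<>(props)) {
            ProducerRecord<String, String> record = new ProducerRecord<>("my-topic", "key", "value");
            // send() is asynchronous; the callback runs once the broker responds
            producer.send(record, (metadata, exception) -> {
                if (exception != null) {
                    System.err.println("Error publishing message: " + exception.getMessage());
                } else {
                    System.out.println("Message published successfully: " + metadata.topic() + ", partition " + metadata.partition() + ", offset " + metadata.offset());
                }
            });
        }
    }
}
```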

Kafka producers provide configurable options for error handling and message delivery guarantees, allowing developers to choose the appropriate level of reliability for their use case:

By configuring these parameters appropriately and handling errors effectively, Kafka producers can ensure reliable and robust message publishing to Kafka topics, meeting the requirements of various use cases with different levels of reliability and consistency.
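The delivery guarantees discussed in this section map onto a handful of producer settings. The sketch below groups them into three illustrative profiles. The property keys (`acks`, `retries`, `enable.idempotence`) are standard producer settings; the profile names, the enum, and the class are hypothetical, and the values are one reasonable choice rather than the only one.

```java
import java.util.Properties;

// Sketch: producer settings for three delivery profiles.
// Assumption: profile names are made up here; only the property keys are Kafka's.
final class DeliveryConfigSketch {
    enum Guarantee { AT_MOST_ONCE, AT_LEAST_ONCE, IDEMPOTENT }

    static Properties producerProps(Guarantee guarantee) {
        Properties props = new Properties();
        switch (guarantee) {
            case AT_MOST_ONCE:
                // Fire and forget: no acknowledgment, no retries, messages may be lost.
                props.put("acks", "0");
                props.put("retries", "0");
                props.put("enable.idempotence", "false");
                break;
            case AT_LEAST_ONCE:
                // Wait for all in-sync replicas and retry; retries may create duplicates.
                props.put("acks", "all");
                props.put("retries", "2147483647");
                props.put("enable.idempotence", "false");
                break;
            case IDEMPOTENT:
                // As above, but the broker discards duplicates caused by retries.
                props.put("acks", "all");
                props.put("retries", "2147483647");
                props.put("enable.idempotence", "true");
                break;
        }
        return props;
    }

    public static void main(String[] args) {
        for (Guarantee guarantee : Guarantee.values()) {
            System.out.println(guarantee + " -> " + producerProps(guarantee));
        }
    }
}
```

Setting `enable.idempotence` explicitly in every profile avoids depending on the client version's default.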

Kafka consumers are essential components for subscribing to Kafka topics and processing data records published by producers. Let's explore the different types of Kafka consumers, strategies for scaling consumer applications, and techniques for handling message processing failures effectively.

Kafka consumers can be categorized into different types based on their characteristics and behavior:

Scaling Kafka consumers involves deploying multiple consumer instances and distributing data processing across them effectively. Here are some strategies for scaling Kafka consumers:

Handling message processing failures is crucial for ensuring data integrity and application reliability. Kafka consumers may encounter various types of failures during message processing, including network errors, data parsing errors, or application crashes. Here are some techniques for handling message processing failures effectively:

By implementing these strategies and techniques, Kafka consumers can effectively scale to handle large volumes of data, ensure reliable message processing, and maintain data consistency and integrity even in the face of failures and disruptions.
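Scaling through consumer groups comes down to how partitions are divided among group members. The sketch below models a simple round-robin split in plain Java. It is not one of Kafka's actual assignor implementations, and the consumer names are made up, but it shows the key consequence: each partition goes to exactly one consumer in the group, and consumers beyond the partition count receive nothing.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified round-robin model of partition assignment within one consumer group.
// Assumption: a single topic whose partitions are numbered 0..numPartitions-1.
final class GroupAssignmentSketch {
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        for (int partition = 0; partition < numPartitions; partition++) {
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Four consumers but only three partitions: c4 stays idle.
        System.out.println(assign(List.of("c1", "c2", "c3", "c4"), 3));
        // prints {c1=[0], c2=[1], c3=[2], c4=[]}
    }
}
```

This is why the partition count sets the upper bound on parallelism within a single consumer group.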

Kafka Streams is a powerful library in Apache Kafka for building real-time stream processing applications. In this section, we'll explore the Kafka Streams API, its use cases, advantages, and how to implement stream processing applications using Kafka Streams.

The Kafka Streams API provides a lightweight, scalable, and fault-tolerant library for building stream processing applications on top of Kafka. Applications built with it run as ordinary Java processes that read from and write to Kafka topics, so no separate processing cluster is required. It allows developers to process data in real time, enabling low-latency analytics, transformations, and aggregations. Because the library ships with Kafka and relies on Kafka itself for storage and coordination, it removes the need for an external stream processing framework and simplifies application development.

Kafka Streams offers several advantages for real-time data processing, making it suitable for a wide range of use cases:

Developing stream processing applications with Kafka Streams involves the following steps:

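```java
import java.util.Properties;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.KafkaStreams;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.kstream.KStream;

public class UppercaseStreamsApp {
    public static void main(String[] args) {
        Properties config = new Properties();
        config.put(StreamsConfig.APPLICATION_ID_CONFIG, "my-streams-app");
        config.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "kafka1:9092,kafka2:9092");
        config.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        config.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        // Topology: read input-topic, upper-case each value, write to output-topic
        StreamsBuilder builder = new StreamsBuilder();
        KStream<String, String> input = builder.stream("input-topic");
        KStream<String, String> transformed = input.mapValues(value -> value.toUpperCase());
        transformed.to("output-topic");

        KafkaStreams streams = new KafkaStreams(builder.build(), config);
        streams.start();
    }
}
```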

By leveraging the Kafka Streams API, developers can build robust, scalable, and fault-tolerant stream processing applications on top of Kafka, enabling real-time analytics, event-driven microservices, and IoT data processing with ease and efficiency.

Monitoring and maintaining Kafka clusters are essential tasks to ensure their reliability, performance, and availability.

Monitoring Kafka clusters provides insights into their performance, health, and resource utilization, allowing administrators to detect issues, optimize configurations, and ensure smooth operation. Key reasons why monitoring Kafka clusters is crucial include:

Several tools and techniques are available for monitoring Kafka clusters and ensuring their performance and health:

Operating Kafka clusters comes with various challenges, but with the right tools and practices, these challenges can be addressed effectively:

By adopting a proactive approach to monitoring and operations, administrators can ensure the stability, performance, and reliability of Kafka clusters, enabling seamless data processing and streamlining operations. Regular monitoring, proper tooling, and effective troubleshooting practices are essential for maintaining Kafka clusters in production environments.
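Consumer lag is one of the most commonly watched health signals for a Kafka deployment: for each partition it is the gap between the latest offset in the log and the offset the consumer group has committed. The sketch below computes it from two maps of offsets. The names and numbers are illustrative assumptions; in practice the inputs would come from a monitoring tool or Kafka's admin tooling rather than hard-coded maps.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch: per-partition consumer lag = log end offset minus committed offset.
// Assumption: a partition with no committed offset is treated as committed at 0.
final class ConsumerLagSketch {
    static Map<Integer, Long> lagPerPartition(Map<Integer, Long> logEndOffsets,
                                              Map<Integer, Long> committedOffsets) {
        Map<Integer, Long> lag = new TreeMap<>();
        for (Map.Entry<Integer, Long> entry : logEndOffsets.entrySet()) {
            long committed = committedOffsets.getOrDefault(entry.getKey(), 0L);
            lag.put(entry.getKey(), Math.max(0L, entry.getValue() - committed));
        }
        return lag;
    }

    public static void main(String[] args) {
        Map<Integer, Long> logEndOffsets = Map.of(0, 100L, 1, 50L);
        Map<Integer, Long> committedOffsets = Map.of(0, 90L);
        System.out.println(lagPerPartition(logEndOffsets, committedOffsets)); // prints {0=10, 1=50}
    }
}
```

A lag value that keeps growing over time usually means consumers cannot keep up with producers, which is a cue to add consumers or revisit processing cost.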

Integrating Kafka with other technologies is essential for building robust and scalable data processing pipelines. We'll explore how Kafka integrates with popular big data frameworks like Apache Spark and Apache Flink, leveraging Kafka Connect for seamless data integration, and best practices for integrating Kafka into existing data pipelines.

Apache Spark and Apache Flink are two widely used big data processing frameworks that can seamlessly integrate with Kafka:

Kafka Connect is a framework for building and running connectors that stream data between Kafka and other systems in a scalable and fault-tolerant manner:

Integrating Kafka into existing data pipelines requires careful planning and consideration of several factors:

By following these best practices and leveraging Kafka's integration capabilities with other technologies, organizations can build robust, scalable, and efficient data processing pipelines that meet their real-time data processing requirements while seamlessly integrating with existing data infrastructure and systems.

Delving deeper into Kafka, we encounter advanced concepts critical for understanding its security, data consistency guarantees, and its expansive ecosystem. Let's explore Kafka's security measures, the elusive exactly-once semantics, and the rich ecosystem surrounding Kafka.

Ensuring the security of Kafka clusters is paramount for protecting sensitive data and preventing unauthorized access. Kafka provides robust security features:

Achieving exactly-once semantics in distributed systems is notoriously challenging due to the potential for duplicates and out-of-order processing. Kafka offers transactional capabilities to ensure exactly-once message processing:

The Kafka ecosystem comprises a diverse set of projects and tools that extend Kafka's functionality and integrate it with other technologies:

Understanding Kafka's security features, exactly-once semantics, and its rich ecosystem of projects is crucial for deploying Kafka clusters securely, ensuring data consistency, and leveraging its full potential for building real-time data processing pipelines and applications. By mastering these advanced concepts, organizations can harness Kafka's capabilities to meet their evolving data processing needs and drive innovation in their data architectures.

Mastering Kafka interview questions is not just about memorizing answers but understanding the underlying concepts and principles. By familiarizing yourself with Kafka's architecture, key components, and real-world use cases, you'll be well-equipped to tackle any interview scenario with confidence. Remember to stay updated with the latest developments in Kafka and its ecosystem, as technology is constantly evolving. Additionally, practice problem-solving and critical thinking skills to demonstrate your ability to apply Kafka concepts to practical scenarios. With dedication, preparation, and a solid understanding of Kafka, you'll be well on your way to acing your next Kafka interview and advancing your career in the dynamic field of real-time data processing.

In the competitive landscape of today's job market, employers are actively seeking candidates who possess strong Kafka skills and a deep understanding of distributed systems and event streaming. By showcasing your expertise in Kafka interview questions, you not only demonstrate your technical proficiency but also your ability to innovate and solve complex challenges. As organizations increasingly rely on Kafka for real-time data processing and analytics, professionals with Kafka skills are in high demand across various industries. Whether you're a seasoned Kafka developer or a newcomer to the field, honing your Kafka knowledge and mastering interview questions will undoubtedly open doors to exciting career opportunities and pave the way for success in the fast-paced world of data engineering and stream processing.